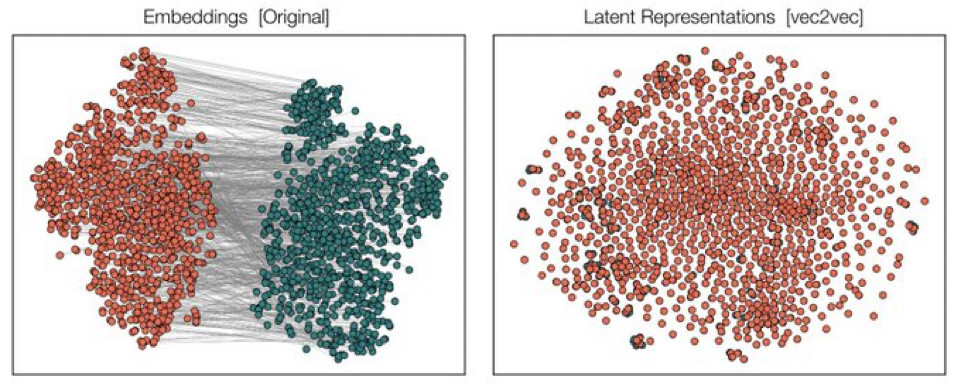

Strong Platonic Representation Hypothesis

All embedding models, given large enough scale, can be translated into one another's embedding spaces without paired data
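The toy sketch below shows why shared geometry can make unpaired translation possible. It rests on idealized assumptions. Two hypothetical "models" embed the same 20 concepts in 2-D. Model B's space is an exact rotation of model A's. B's vectors arrive shuffled, so no pairs are known.

Each point's sorted distances to all the other points stay the same under rotation and reordering. Matching those signatures therefore recovers the pairing, and a closed-form 2-D Procrustes fit then recovers the rotation. This is not the paper's method. Real embedding spaces are far higher-dimensional and only approximately related.

```python
import math
import random

def rotate(p, theta):
    """Rotate a 2-D point p counterclockwise by theta radians."""
    c, s = math.cos(theta), math.sin(theta)
    return (c * p[0] - s * p[1], s * p[0] + c * p[1])

def signature(points, i):
    """Sorted distances from points[i] to every other point.
    Unchanged if the whole cloud is rotated or reordered."""
    return sorted(math.dist(points[i], q) for j, q in enumerate(points) if j != i)

def recover_pairs(space_a, space_b):
    """Match each B point to the A point with the closest signature (no labels used)."""
    sig_a = [signature(space_a, i) for i in range(len(space_a))]
    pairs = []
    for j in range(len(space_b)):
        sig_b = signature(space_b, j)
        best = min(
            range(len(space_a)),
            key=lambda i: sum((u - v) ** 2 for u, v in zip(sig_a[i], sig_b)),
        )
        pairs.append((best, j))
    return pairs

def fit_rotation(space_a, space_b, pairs):
    """Closed-form 2-D orthogonal Procrustes: the angle that best rotates A onto B."""
    cross = sum(space_a[i][0] * space_b[j][1] - space_a[i][1] * space_b[j][0] for i, j in pairs)
    dot = sum(space_a[i][0] * space_b[j][0] + space_a[i][1] * space_b[j][1] for i, j in pairs)
    return math.atan2(cross, dot)

# Hypothetical setup: the same 20 "concepts" embedded by two models.
random.seed(0)
concepts = [(random.gauss(0, 1), random.gauss(0, 1)) for _ in range(20)]
true_theta = 1.1  # unknown to the aligner
space_a = concepts
rotated = [rotate(p, true_theta) for p in concepts]
order = list(range(len(rotated)))
random.shuffle(order)
space_b = [rotated[k] for k in order]  # shuffled: no pairing is given

pairs = recover_pairs(space_a, space_b)
theta = fit_rotation(space_a, space_b, pairs)

# Translate a vector from B's space back into A's space.
translated = rotate(space_b[0], -theta)
```

Once `theta` is estimated, any new vector from B can be mapped into A with `rotate(v, -theta)`, and no paired example was ever used.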

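Security implication: embeddings aren’t encryption; they’re basically plain text. Anyone who obtains only the vectors can translate them into the space of a model they control and extract information about the underlying text.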
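The sketch below illustrates the plain-text point. It assumes the attacker can already evaluate an encoder for the vectors' space, which is what unpaired translation would provide. `toy_embed` is a hypothetical stand-in for a real embedding model: a hashed bag of words.

The attack shown is the simplest possible one. It compares a leaked vector against embeddings of candidate texts and keeps the closest match. Stronger inversion attacks generate the text directly instead of choosing from a list.

```python
import hashlib
import math

DIM = 256  # hypothetical embedding width

def toy_embed(text):
    """Hypothetical stand-in for an embedding model: hashed bag of words, L2-normalized."""
    vec = [0.0] * DIM
    for word in text.lower().split():
        bucket = int(hashlib.sha256(word.encode("utf-8")).hexdigest(), 16) % DIM
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]

def cosine(u, v):
    """Cosine similarity; both inputs are already unit-length."""
    return sum(a * b for a, b in zip(u, v))

def match_leaked_vector(leaked, candidates):
    """Return the candidate text whose embedding is most similar to the leaked vector."""
    return max(candidates, key=lambda text: cosine(toy_embed(text), leaked))

candidates = [
    "quarterly revenue fell short of analyst forecasts",
    "the patient was diagnosed with type 2 diabetes",
    "meet me at the north entrance at noon",
    "your password reset link expires in ten minutes",
]

# The "database" stores only this vector, never the text.
leaked = toy_embed(candidates[1])
recovered = match_leaked_vector(leaked, candidates)
print(recovered)  # prints: the patient was diagnosed with type 2 diabetes
```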

https://arxiv.org/abs/2505.12540

Comments

https://claude.ai/public/artifacts/7eb19d11-32f6-4ea6-900a-e807cfb5af24

From good old 2021: https://www.microsoft.com/en-us/research/blog/privacy-preserving-machine-learning-maintaining-confidentiality-and-preserving-trust/ #dp #privacy

https://arxiv.org/pdf/2404.16847

Claude's analysis

We assumed it was unidirectional, but this result makes me think it’s more of a bidirectional mapping.

https://arxiv.org/abs/2310.06816