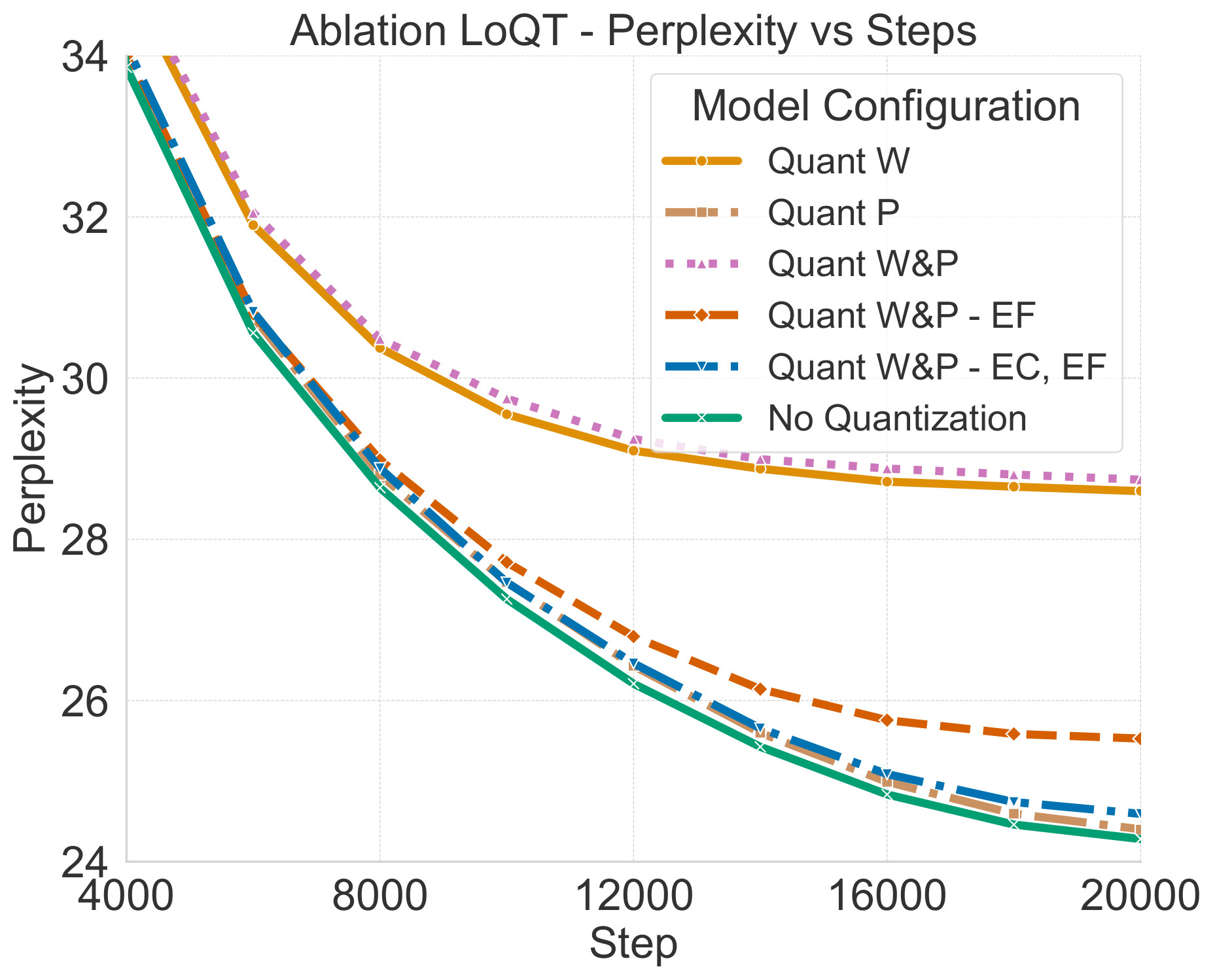

We periodically merge the low-rank adapters into the quantized model over exponentially increasing intervals. After each merge, we reinitialize the adapters and continue training.
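To make the merge schedule concrete, here is a minimal sketch of how exponentially increasing merge intervals could be computed. The function name `merge_steps` and the parameters `first_interval` and `growth` are hypothetical, chosen for illustration; the exact schedule used in LoQT is defined in the paper and code linked below.

```python
def merge_steps(total_steps, first_interval=100, growth=2.0):
    """Return the training steps at which a merge would happen.

    Assumption: each gap between merges is `growth` times the previous
    gap, starting from `first_interval`. These values are illustrative
    and are not taken from the LoQT paper.
    """
    steps = []
    interval = float(first_interval)
    step = 0
    while True:
        step += int(round(interval))
        if step > total_steps:
            break
        steps.append(step)
        interval *= growth  # the next gap is exponentially longer
    return steps


schedule = merge_steps(total_steps=2000, first_interval=100, growth=2.0)
print(schedule)  # [100, 300, 700, 1500]
```

In a training loop, each step in `schedule` would be the point where the adapters are merged into the quantized weights and then reinitialized before training continues.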

We show LoQT works for both LLM pre-training and downstream task adaptation 📊.

3/4

Great collaboration with @mabeto5p, @mjkastoryano, @sergebelongie.bsky.social, and @vesteinns.bsky.social

Code: https://github.com/sebulo/LoQT 💻

Paper: https://arxiv.org/abs/2405.16528 📄

This research was funded by @DataScienceDK and @AiCentreDK, and is a collaboration between @DIKU_Institut, @ITUkbh, and @csaudk