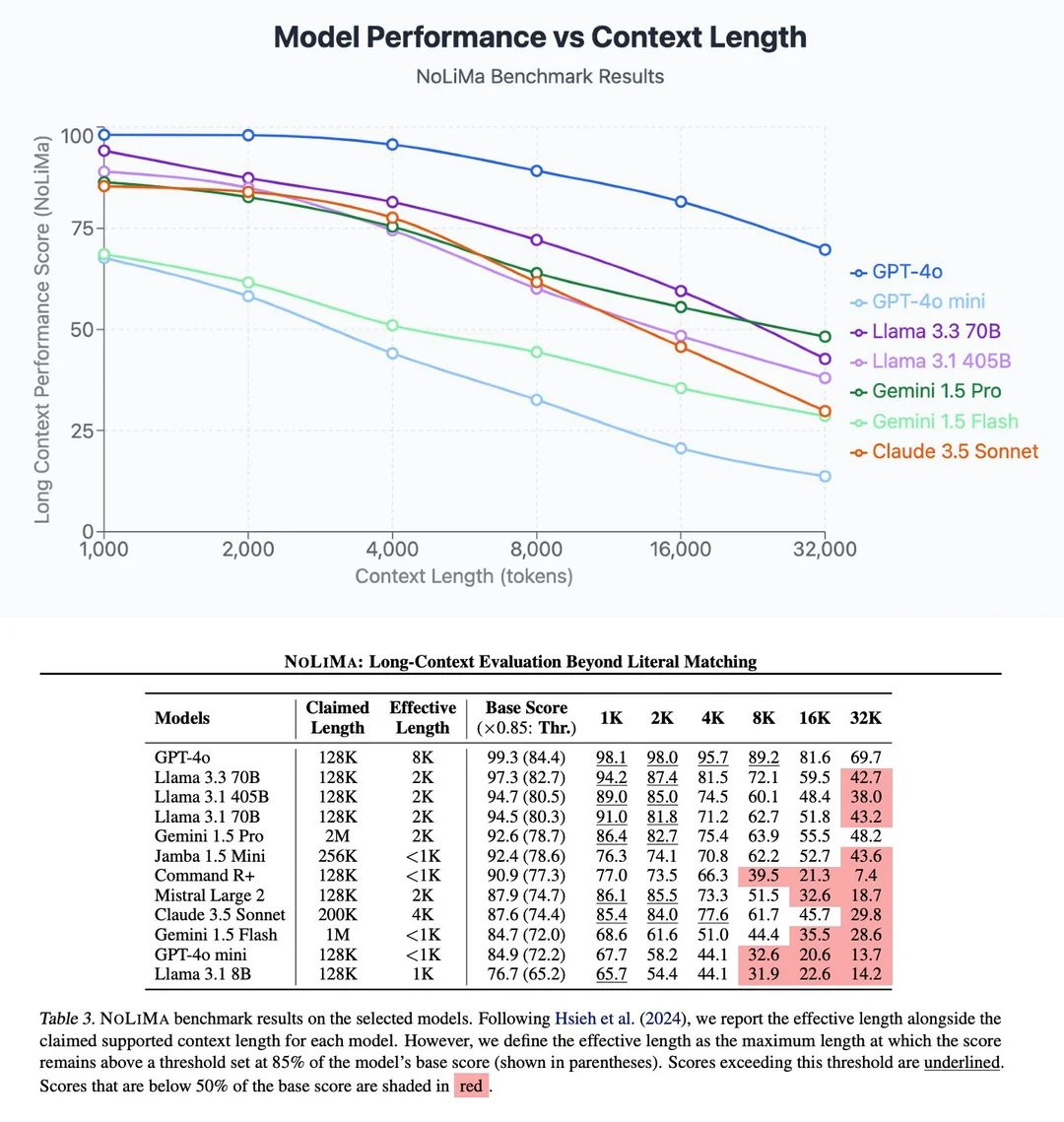

New research measuring exactly how much performance LLMs lose as context gets longer. I wish we could just dump everything into the context, but it just doesn't work.

Link: https://arxiv.org/abs/2502.05167
