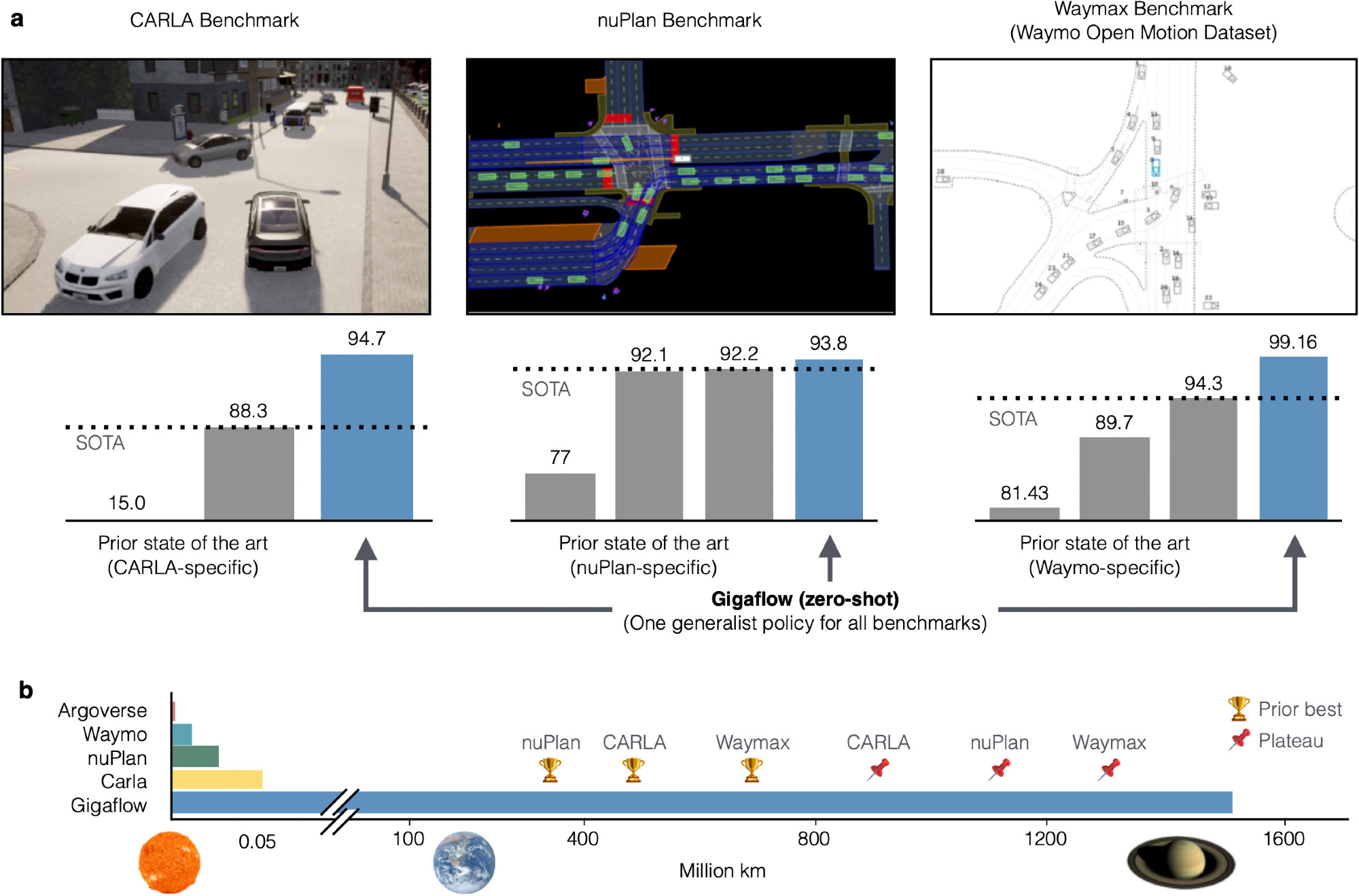

We've built a simulated driving agent that we trained on 1.6 billion km of driving with no human data.

It is SOTA on every planning benchmark we tried.

In self-play, it goes 20 years between collisions.

Comments

And looking fwd to a zero DUI world.

The Deep Sets approach for encoding a variable number of agents is interesting.

Always wondered how Deep Sets behaves OOD, i.e., if there were far more agents on the road than in training (say, a Western driver encountering an Asian traffic jam), does it saturate?
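
To make the saturation question concrete, here is a minimal Deep Sets-style sketch in NumPy. It is not the encoder from the post: the weights `W_phi`, the 4-dimensional agent features, and the `encode` helper are all hypothetical, chosen only to show how the pooling choice reacts when the number of agents grows well past a small "training-sized" scene.

```python
import numpy as np

rng = np.random.default_rng(0)
W_phi = rng.normal(size=(4, 8))  # hypothetical per-agent encoder weights


def phi(agents):
    """Per-agent embedding, applied independently to each row."""
    return np.tanh(agents @ W_phi)


def encode(agents, pool="sum"):
    """Permutation-invariant set encoding: pool per-agent embeddings."""
    feats = phi(agents)
    return feats.sum(axis=0) if pool == "sum" else feats.mean(axis=0)


# A small scene, and a crowded one built by repeating the same agents 64 times.
few = rng.normal(size=(8, 4))
many = np.tile(few, (64, 1))

# Order of agents does not matter.
shuffled = few[rng.permutation(len(few))]
order_invariant = np.allclose(encode(few), encode(shuffled))

# Sum pooling scales linearly with agent count; mean pooling does not change.
sum_ratio = np.linalg.norm(encode(many, "sum")) / np.linalg.norm(encode(few, "sum"))
mean_ratio = np.linalg.norm(encode(many, "mean")) / np.linalg.norm(encode(few, "mean"))
print(order_invariant, round(sum_ratio, 3), round(mean_ratio, 3))
```

In this toy setup, sum pooling pushes the pooled vector far outside the range seen with few agents, while mean pooling keeps the scale fixed but discards the count. Which of these (or max pooling, or something else) applies to the agent in the post is not stated in the thread.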

Love the planets on the bottom plot! 🪐

I'm curious if these benchmarks include hard tests of OOD generalization, e.g. driving conditions in Mumbai or Hanoi? Seems like it'd be a nice stress test of large-scale offline RL (vs. online planning).

Could you please help me understand how GPUDrive and GIGAFLOW are connected?

Is GIGAFLOW building upon GPUDrive?

Do you know when/where the supplementary material will be released? (I.e., where can we find the videos?!)

I loved that we did this as a team, each adding something unique but also equally sharing all the grimy work. We all wrote the simulator, we all did the RL, we all jumped on the grenades.

w/ @senerozan.bsky.social @twkillian.bsky.social (+ others who are not on bsky)

Is there a GitHub with demo?

Thanks

(AV systems product manager here)

https://github.com/Emerge-Lab/gpudrive

Some upsides in it, some downsides but similar speed