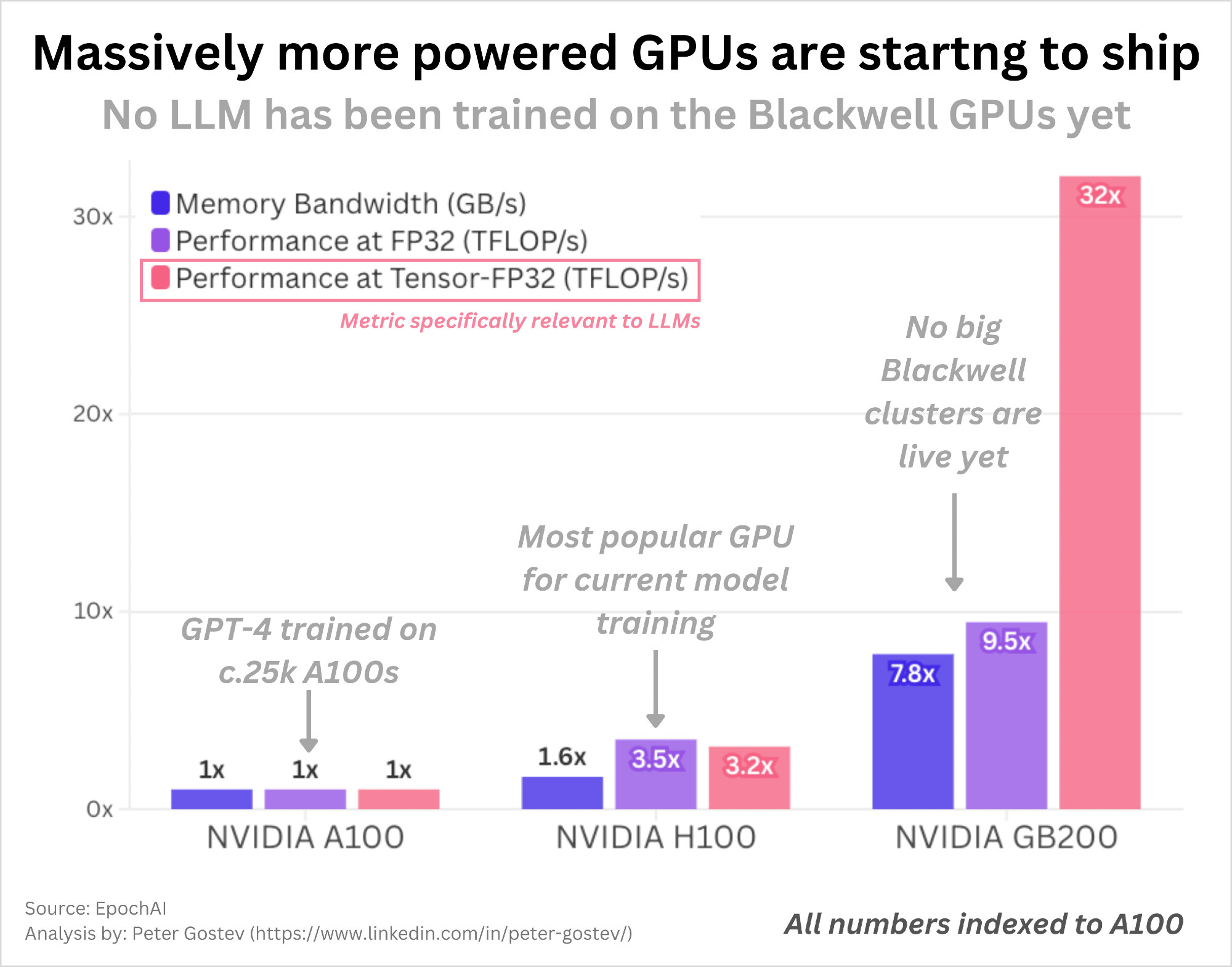

Deep learning isn’t hitting a wall; our compute has been lagging. GPT-4 was trained on ~25k A100 GPUs, a chip that is now four years old. GPT-5 was likely trained on ~70k H100s. But Blackwell (GB200) GPUs are 8-32x faster. The first clusters are only now shipping, so expect new LLMs trained on them in ~12-18 months.

Data: @epochai.bsky.social