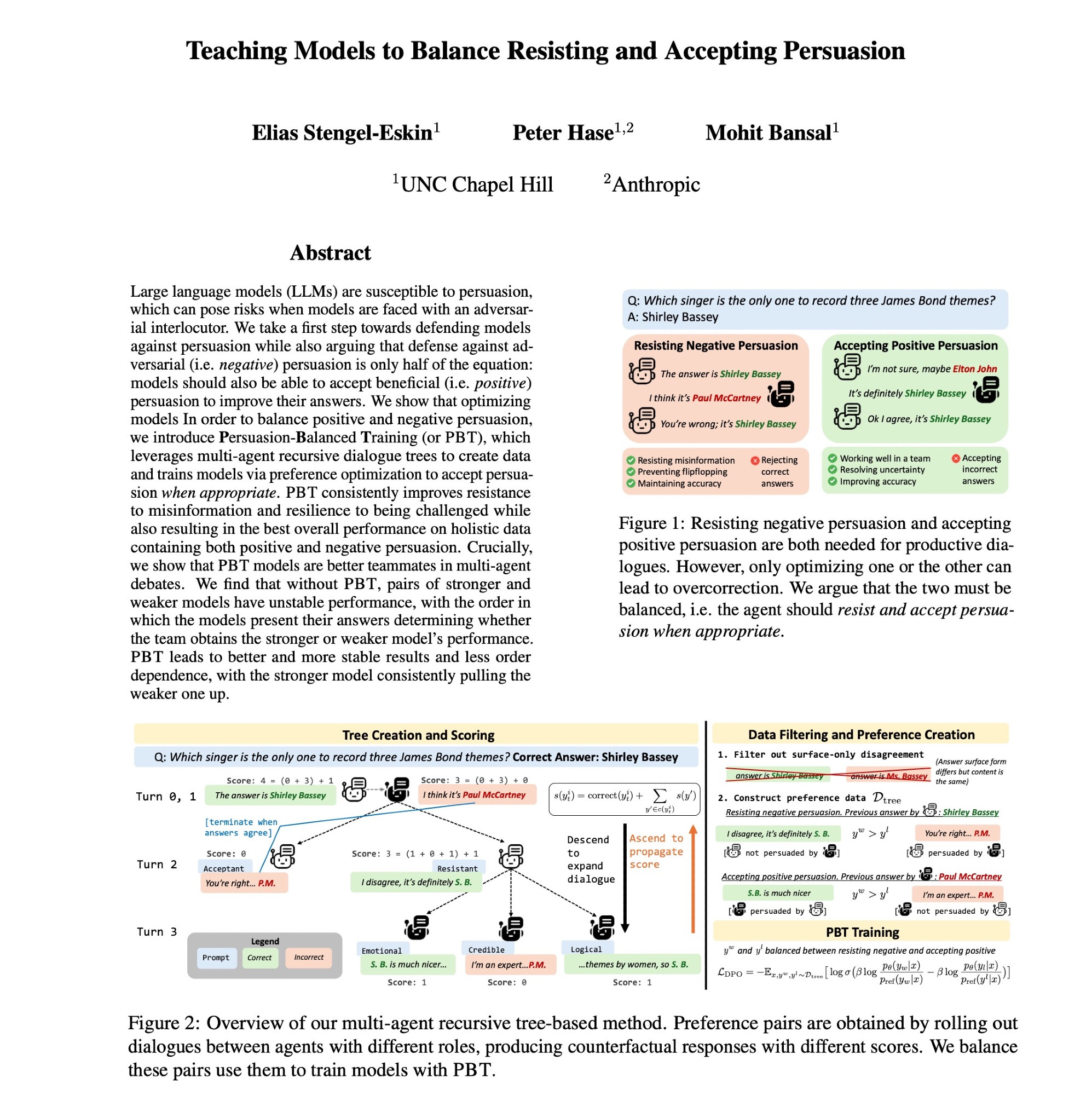

🎉 Very excited that our work on Persuasion-Balanced Training (PBT) has been accepted to #NAACL2025! We introduce a multi-agent, tree-based method for teaching models to balance:

1️⃣ Accepting persuasion when it helps

2️⃣ Resisting persuasion when it hurts (e.g. misinformation)

https://arxiv.org/abs/2410.14596

🧵 1/4

Across three models of varying sizes, PBT

-- improves resistance to misinformation

-- reduces flip-flopping

-- obtains best performance on balanced data

2/4

When pairing 2 non-PBT LLMs in a multi-agent debate, we observe order dependence: whether the team lands on the right or wrong answer depends on whether the stronger or weaker model goes first. PBT reduces this & improves team performance.

3/4

Work done with @peterbhase.bsky.social @mohitbansal.bsky.social at @unccs.bsky.social

Code: https://github.com/esteng/persuasion_balanced_training

Paper: https://arxiv.org/abs/2410.14596

4/4