We find that it rarely performs proper editing:

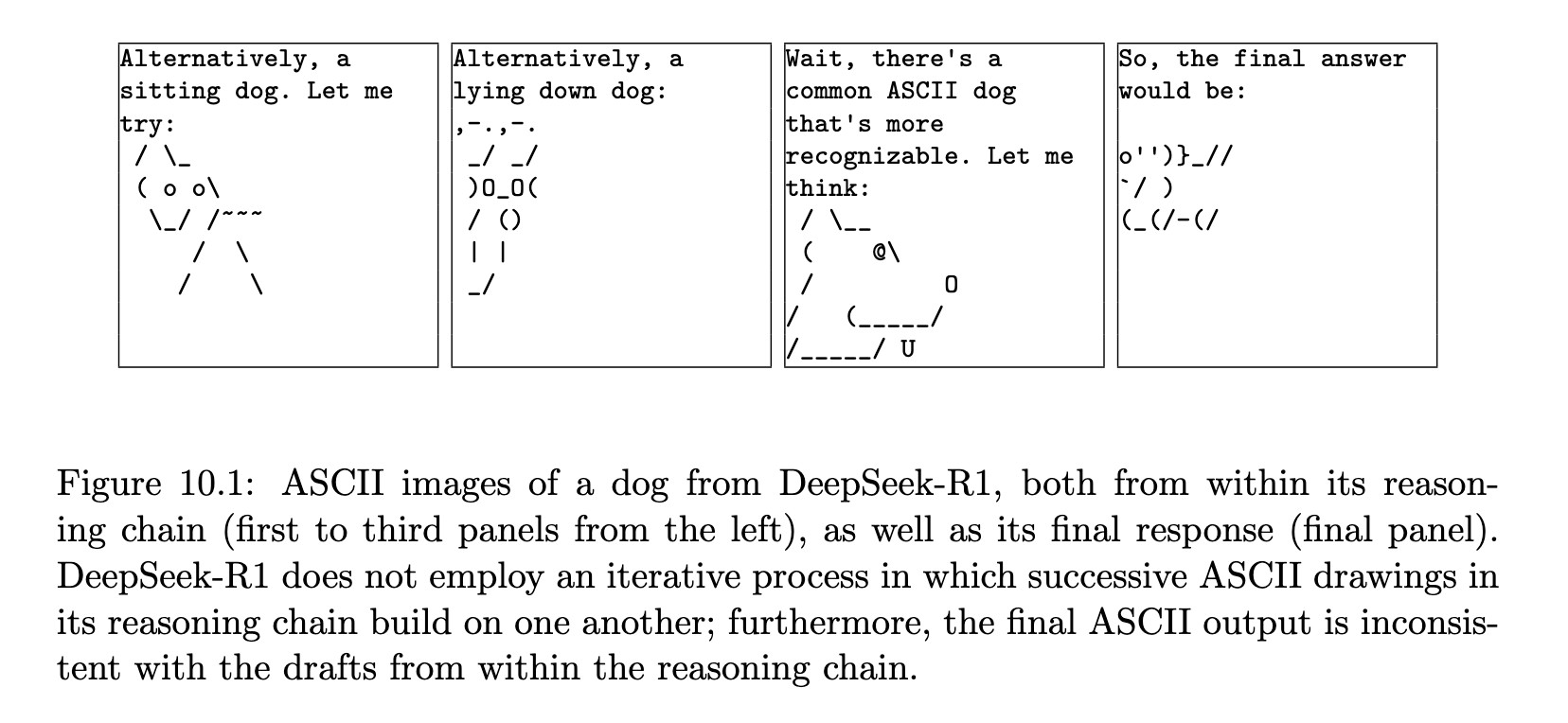

R1 often completely discards its initial drafts, starting from scratch over and over. Similarly, the final output after "" might not be faithful to the drafts from the thinking process

R1 often completely discards its initial drafts, starting from scratch over and over. Similarly, the final output after "

Comments

A more complex task does not necessarily lead to longer reasoning chains.

We ask the model to generate single objects (e.g. dog); and then also object compositions (e.g. half-dog half-shark) as a more complex

However this second task leads to slightly shorter chains!

We find that R1 often "gets lost" in symbolic/math reasoning instead of generating actual drafts of the "video"

Overall:

1. R1 slightly better than V3

2. Yet still many failure modes, especially a lack of iterative refinement