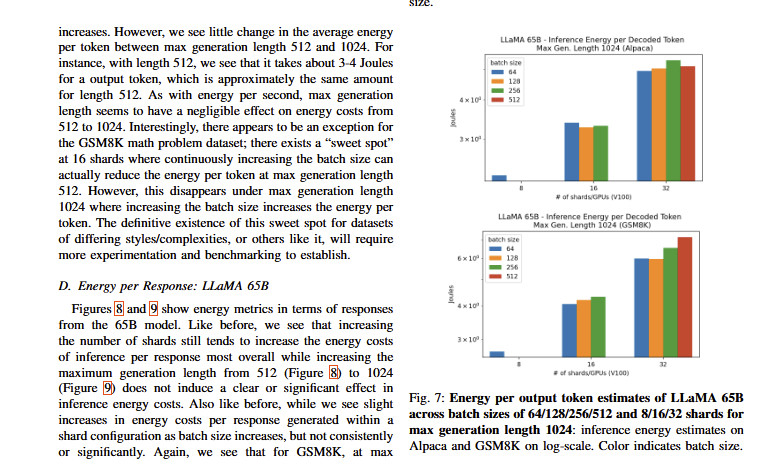

Most of the talk around AI and energy use refers to an older 2020 estimate of GPT-3's energy consumption, but a more recent paper directly measures the energy use of Llama 65B at 3-4 joules per decoded token.

So an hour of streaming Netflix is equivalent to 70,000-90,000 tokens from the 65B model. https://arxiv.org/pdf/2310.03003
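The arithmetic behind that comparison can be sketched roughly as follows. The 3-4 joules per token comes from the post; the streaming energy figure of 0.077 kWh per hour is an assumption that is not stated in the post, picked here only because it reproduces the quoted token range. The function and constant names are illustrative.

```python
JOULES_PER_KWH = 3.6e6  # 1 kWh is 3.6 million joules

# ASSUMPTION: energy used by one hour of video streaming, in kWh.
# This value is not given in the post; it is a placeholder that happens
# to reproduce the token range quoted above.
ASSUMED_STREAMING_KWH_PER_HOUR = 0.077


def tokens_for_energy(energy_joules: float, joules_per_token: float) -> float:
    """Return how many decoded tokens a given energy budget covers."""
    return energy_joules / joules_per_token


streaming_joules = ASSUMED_STREAMING_KWH_PER_HOUR * JOULES_PER_KWH

# 4 J/token is the costlier end of the measured range, so it gives fewer tokens.
low = tokens_for_energy(streaming_joules, 4.0)
# 3 J/token is the cheaper end, so it gives more tokens.
high = tokens_for_energy(streaming_joules, 3.0)

print(f"{low:,.0f} to {high:,.0f} tokens per assumed streaming hour")
```

Under that assumed streaming figure the result lands at roughly 69,000 to 92,000 tokens, in line with the range in the post. A different streaming estimate scales the answer proportionally.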


Comments

https://wimvanderbauwhede.codeberg.page/

https://ieeexplore.ieee.org/document/10549890

You have three simultaneous axes of improvements:

* Hardware that does more FLOPS per joule

* Models that do the same work at smaller sizes

* Inference servers that run faster

And, of course, in aggregate AI data centers will have a real environmental impact.

Nowadays we have faster hardware, much smaller models for a given capability, and inference techniques like speculative decoding, all applied multiplicatively to each other. You can't take dated numbers seriously.
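To make the "applied multiplicatively" point concrete, here is a minimal sketch. It assumes the three improvement axes are independent, which is the commenter's claim rather than a measured result, and every factor below is a hypothetical placeholder, not data from any paper.

```python
from math import prod


def combined_energy_per_token(baseline_joules, improvement_factors):
    """Divide a baseline energy per token by the product of efficiency gains.

    Assumes the gains are independent, so they multiply.
    """
    factors = list(improvement_factors)
    if any(f <= 0 for f in factors):
        raise ValueError("improvement factors must be positive")
    return baseline_joules / prod(factors)


# HYPOTHETICAL placeholder gains, one per axis from the list above.
# None of these numbers is a measurement.
factors = {
    "hardware_flops_per_joule": 2.0,
    "smaller_model_same_capability": 2.0,
    "faster_inference_server": 2.0,
}

# 4.0 J/token is the upper end of the range quoted in the original post.
updated = combined_energy_per_token(4.0, factors.values())
print(f"{updated} J/token after a combined {prod(factors.values())}x gain")
```

Even modest gains on each axis compound quickly, which is why a years-old per-token figure can be far off for current deployments.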

Like, we don’t let folk calculate fuel efficiency performance or standards by using their own vehicles.

Or, we don’t let individuals test drug efficacy by testing said drug on themselves.

Lucky it's an open-weight model, isn't it? Unlike ChatGPT, Claude, or Gemini. Anyone can buy the hardware and download the weights if they care enough.

https://ieeexplore.ieee.org/document/10363447

The article review committees at such journals question everything from the calculations to the hypotheses to the experimentation.

Making experimental data public doesn’t insulate scientific-sounding conclusions from verification.

But I honestly do appreciate your explanation and engagement with my question.

It’s always tricky to draw conclusions from a study that hasn’t yet had a neutral third party check its work.

I’m not sure if this is still his “beat” but Liam Kofi Bright (@lastpositivist.bsky.social) has written interesting stuff about peer review. His work is absolutely worth checking out.

https://www.thebsps.org/short-reads/peer-review-bright-heesen/