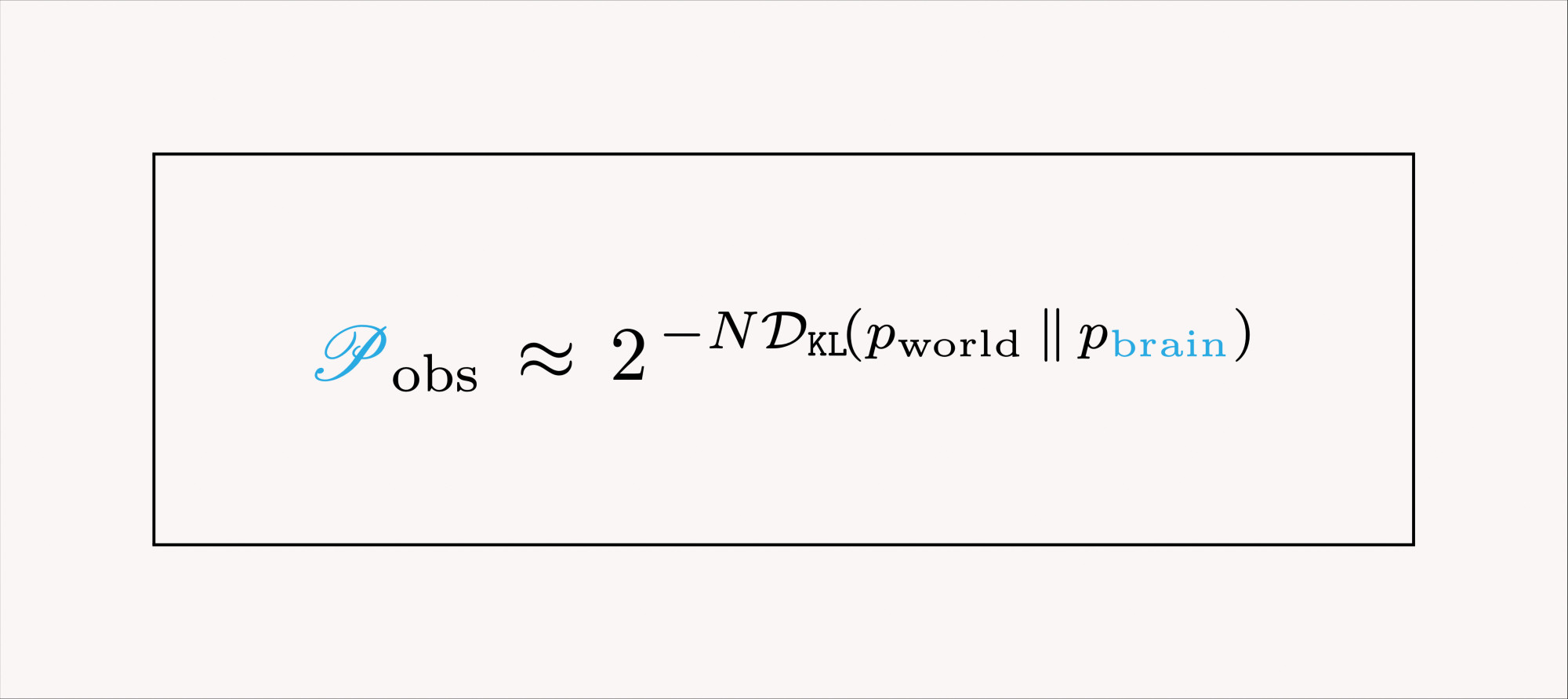

A few simple logical steps yield our main theoretical result👇

This magical equation connects two seemingly distinct concepts:

1⃣ P_obs: the subjective probability your brain assigns to its observations

2⃣ KL divergence between the true world and your internal beliefs

🧵[4/n]

you adapt to the world

↔️

you accurately predict the world

↔️

fewer nasty surprises that could get you killed

↔️

KL ( world || your beliefs ) drops

🧵[5/n]
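For reference, here is the standard textbook definition of KL divergence for discrete distributions. The symbols are assumptions made for illustration: $P$ stands for the world and $Q$ for your beliefs. The thread's own equation lives in the image and is not reproduced here.

```latex
D_{\mathrm{KL}}(P \,\|\, Q)
  = \sum_{x} P(x) \log \frac{P(x)}{Q(x)}
  = \underbrace{\mathbb{E}_{x \sim P}\!\left[-\log Q(x)\right]}_{\text{surprise under your beliefs}}
  - \underbrace{\mathbb{E}_{x \sim P}\!\left[-\log P(x)\right]}_{\text{unavoidable surprise}}
  \;\ge\; 0,
\qquad
D_{\mathrm{KL}}(P \,\|\, Q) = 0 \iff P = Q .
```

Read this way, KL is the average extra surprise you pay for believing $Q$ when the world follows $P$, which is why better predictions and a lower KL go together.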

I didn't put KL divergence in by hand, or make crazy assumptions.

✅ There is something truly fundamental, unique, and privileged about KL divergence.

🧵[6/n]

✅ In section 4, I apply these foundational principles to "derive" (almost) all of machine learning from KL minimization.

(first pointed out by @alexalemi.bsky.social : https://blog.alexalemi.com/kl-is-all-you-need.html)

🧵[7/n]

I then connect this to philosophy of science, and experiments as "truth-revealing actions."

(another relevant @alexalemi.bsky.social piece: https://blog.alexalemi.com/kl.html)

🧵[8/n]

✅ KL divergence must be asymmetric because it captures the flow of information from the world to the brain (e.g., when you read a book or perform an experiment)

🧵[9/n]
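A quick numerical sketch of the asymmetry, using toy numbers that are not from the paper. The distributions `world` and `beliefs` and the helper `kl_divergence` are hypothetical names chosen for illustration.

```python
import math

def kl_divergence(p, q):
    """KL(p || q) in nats for two discrete distributions over the same outcomes."""
    # Terms with p_i == 0 contribute nothing, by the usual convention 0 * log 0 = 0.
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

# Hypothetical two-outcome example: the world is heavily biased,
# while the internal model treats both outcomes as equally likely.
world = [0.9, 0.1]
beliefs = [0.5, 0.5]

forward = kl_divergence(world, beliefs)   # KL(world || beliefs)
reverse = kl_divergence(beliefs, world)   # KL(beliefs || world)

print(f"KL(world || beliefs) = {forward:.4f} nats")
print(f"KL(beliefs || world) = {reverse:.4f} nats")
```

The two directions give different numbers, so swapping the roles of world and beliefs changes the quantity being measured.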

✅ Brains: adapt to survive, survive to adapt

✅ adaptation = KL minimization

✅ most of ML = KL minimization

✅ KL asymmetry captures the flow of information

✅ KL minimization = flow of information from the world into your brain, updating your beliefs

🧵[10/n]
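One small, well-known instance of "ML = KL minimization" is maximum likelihood: fitting a model by maximizing likelihood picks the same parameter as minimizing KL(empirical data || model). The sketch below checks this on a hypothetical coin-flip dataset with a simple grid search. It is a toy illustration, not the section 4 derivation, and all names in it are made up for the example.

```python
import math

def kl_divergence(p, q):
    """KL(p || q) in nats for two discrete distributions over the same outcomes."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

# Hypothetical data: 10 coin flips, 1 = heads, 0 = tails.
data = [1, 1, 0, 1, 1, 1, 0, 1, 0, 1]
freq_heads = sum(data) / len(data)
empirical = [freq_heads, 1 - freq_heads]   # empirical distribution of the data

def avg_neg_log_likelihood(theta):
    """Average negative log-likelihood of the data under a Bernoulli(theta) model."""
    return -sum(math.log(theta if x == 1 else 1 - theta) for x in data) / len(data)

# Candidate values of theta, the model's probability of heads.
grid = [i / 100 for i in range(1, 100)]

best_by_kl = min(grid, key=lambda t: kl_divergence(empirical, [t, 1 - t]))
best_by_likelihood = min(grid, key=avg_neg_log_likelihood)

print("theta minimizing KL(empirical || model):", best_by_kl)
print("theta maximizing likelihood:            ", best_by_likelihood)
```

Both searches land on the same value of theta because the average negative log-likelihood equals KL(empirical || model) plus the entropy of the data, and that entropy does not depend on theta.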