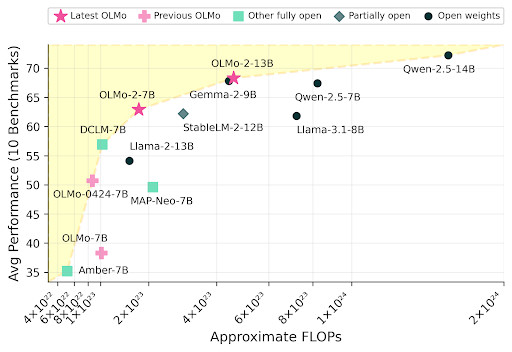

Meet OLMo 2, the best fully open language model to date: a family of 7B and 13B models trained on up to 5T tokens. OLMo 2 outperforms other fully open models and competes with open-weight models like Llama 3.1 8B. As always, we released our data, code, recipes, and more 🎁

Download the full OLMo 2 collection, including model weights and data, on Hugging Face: https://huggingface.co/collections/allenai/olmo-2-674117b93ab84e98afc72edc

Access the training code on GitHub: https://github.com/allenai/OLMo