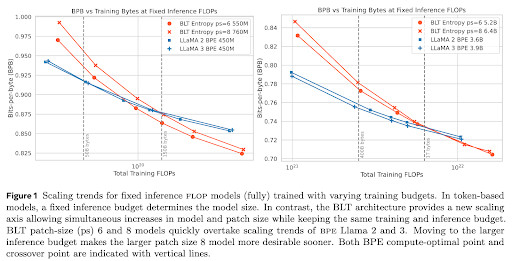

🚀 Introducing the Byte Latent Transformer (BLT) – an LLM architecture that scales better than Llama 3 using patches instead of tokens 🤯

Paper 📄 https://dl.fbaipublicfiles.com/blt/BLT__Patches_Scale_Better_Than_Tokens.pdf

Code 🛠️ https://github.com/facebookresearch/blt

Comments

Wouldn't be surprised if we see this in Llama 4.