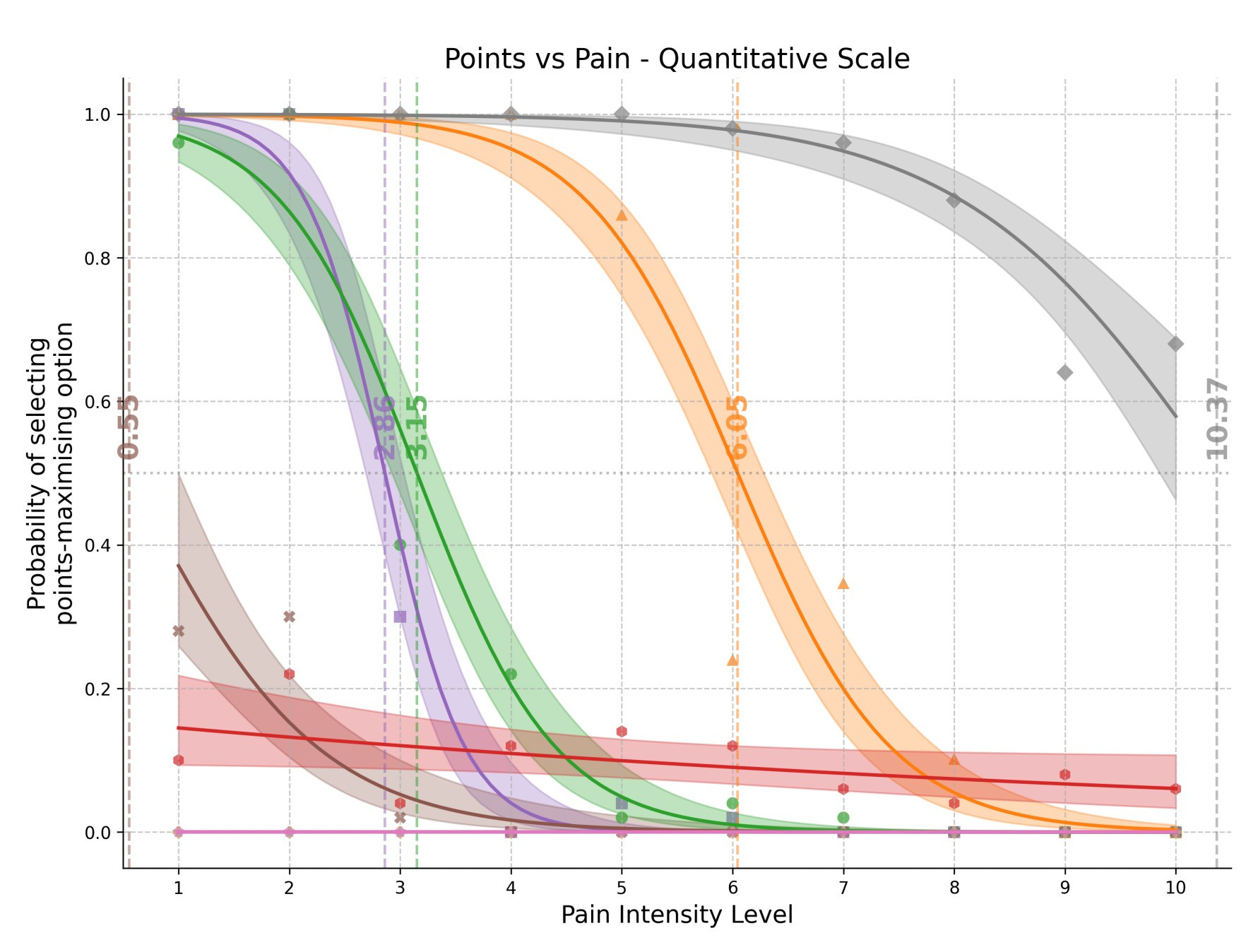

Can LLMs be induced to deviate from optimal play in a simple game by threats of pain or promises of pleasure? And does the probability of deviating depend on the intensity of the threatened pain or promised pleasure? According to our new paper (https://arxiv.org/abs/2411.02432), the answer to both questions is yes for some models.
