🚨 New Paper!

Can neuroscience localizers uncover brain-like functional specializations in LLMs? 🧠🤖

Yes! We analyzed 18 LLMs and found units mirroring the brain's language, theory of mind, and multiple demand networks!

w/ @gretatuckute.bsky.social, @abosselut.bsky.social, @mschrimpf.bsky.social

🧵👇

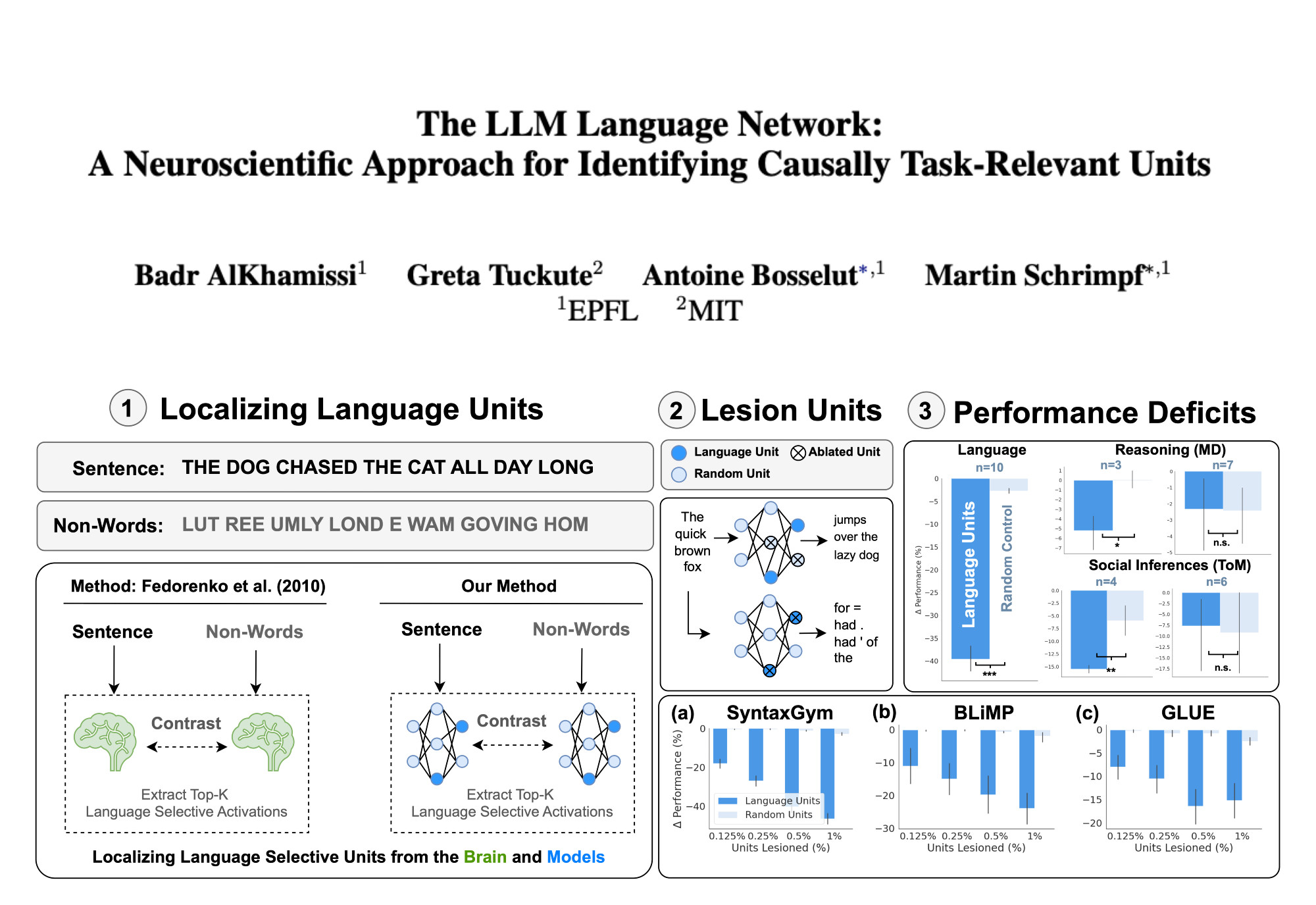

Using the same procedure neuroscientists use to localize the brain's language network, we identified "language-selective units" in LLMs by contrasting their responses to natural language (e.g., sentences) vs. control stimuli (e.g., non-words).

No finetuning needed. Pure analysis!
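For readers who want the gist of a localizer in code, here is a minimal sketch. It assumes one activation vector per stimulus (e.g., unit activations averaged over tokens), ranks units by a per-unit Welch t-statistic for sentences > non-words, and keeps the top fraction. The function names, the use of Welch's t, and taking 0.125% of *all* units are illustrative assumptions, not necessarily the paper's exact pipeline.

```python
import numpy as np

def welch_t(a, b):
    """Per-unit Welch t-statistic for a > b; a and b have shape (n_stimuli, n_units)."""
    mean_diff = a.mean(axis=0) - b.mean(axis=0)
    se = np.sqrt(a.var(axis=0, ddof=1) / a.shape[0] + b.var(axis=0, ddof=1) / b.shape[0])
    return mean_diff / se

def localize_units(acts_sentences, acts_nonwords, top_frac=0.00125):
    """Hypothetical helper: return indices of the most language-selective units.

    top_frac=0.00125 mirrors the 0.125% in the thread (assumed here to be a
    fraction of all units). Units are ordered from most to least selective.
    """
    t = welch_t(acts_sentences, acts_nonwords)
    k = max(1, int(round(top_frac * t.size)))
    # argsort is ascending, so take the last k (largest t) and reverse them
    return np.argsort(t)[-k:][::-1]

# Toy usage with synthetic activations (NOT real model data)
rng = np.random.default_rng(0)
n_units = 4096
sentences = rng.normal(size=(240, n_units))
nonwords = rng.normal(size=(240, n_units))
sentences[:, :5] += 2.0  # plant 5 units that respond more to sentences
units = localize_units(sentences, nonwords)
```

With 4096 units, 0.125% rounds to 5 units, and the planted units are the ones recovered.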

Lesioning the top 0.125% of language-selective units causes significant performance drops (Cohen’s d = 0.8, large effect).

Ablating random units instead? Minimal impact (Cohen's d = 0.1). 🔻
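A rough sketch of the lesion-vs-random comparison, assuming "lesioning" means zeroing a unit's activation and that the effect size is Cohen's d with a pooled standard deviation over per-item scores (intact vs. ablated). The helper names are hypothetical, and the paper's exact ablation and scoring procedure may differ.

```python
import numpy as np

def cohens_d(x, y):
    """Cohen's d with pooled SD: (mean(x) - mean(y)) / s_pooled."""
    nx, ny = len(x), len(y)
    s_pooled = np.sqrt(((nx - 1) * np.var(x, ddof=1) + (ny - 1) * np.var(y, ddof=1)) / (nx + ny - 2))
    return (np.mean(x) - np.mean(y)) / s_pooled

def ablate(hidden, unit_idx):
    """Zero out the selected units in a (..., n_units) activation array (returns a copy)."""
    out = hidden.copy()
    out[..., unit_idx] = 0.0
    return out

def random_units(n_units, k, seed=0):
    """Baseline: pick k distinct units uniformly at random (same k as the localized set)."""
    rng = np.random.default_rng(seed)
    return rng.choice(n_units, size=k, replace=False)

# Usage idea (hypothetical scores): d = cohens_d(scores_intact, scores_ablated).
# A large d for localized units and a small d for random_units(...) would match the thread.
```

Matching the number of randomly ablated units to the localized set keeps the comparison about *which* units are removed, not how many.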

These language-selective units show strong alignment with the brain's language network.

They are also selective for natural language as opposed to math, code or random strings!

We also explored higher-level cognition networks: Theory of Mind (ToM) and Multiple Demand (MD).

Using neuroscience localizers, we found evidence of specialization in some models, though it was less robust than for language.

Our findings link AI and neuroscience, showing that simple objectives like next-token prediction can give rise to specialized systems. Could the same principle explain how the brain is optimized? Predictive coding might hold the key! 🔑

Check out our paper for all the details: https://arxiv.org/abs/2411.02280