What's in an attention head? 🤯

We present an efficient framework – MAPS – for inferring the functionality of attention heads in LLMs ✨directly from their parameters✨

A new preprint with Amit Elhelo 🧵 (1/10)


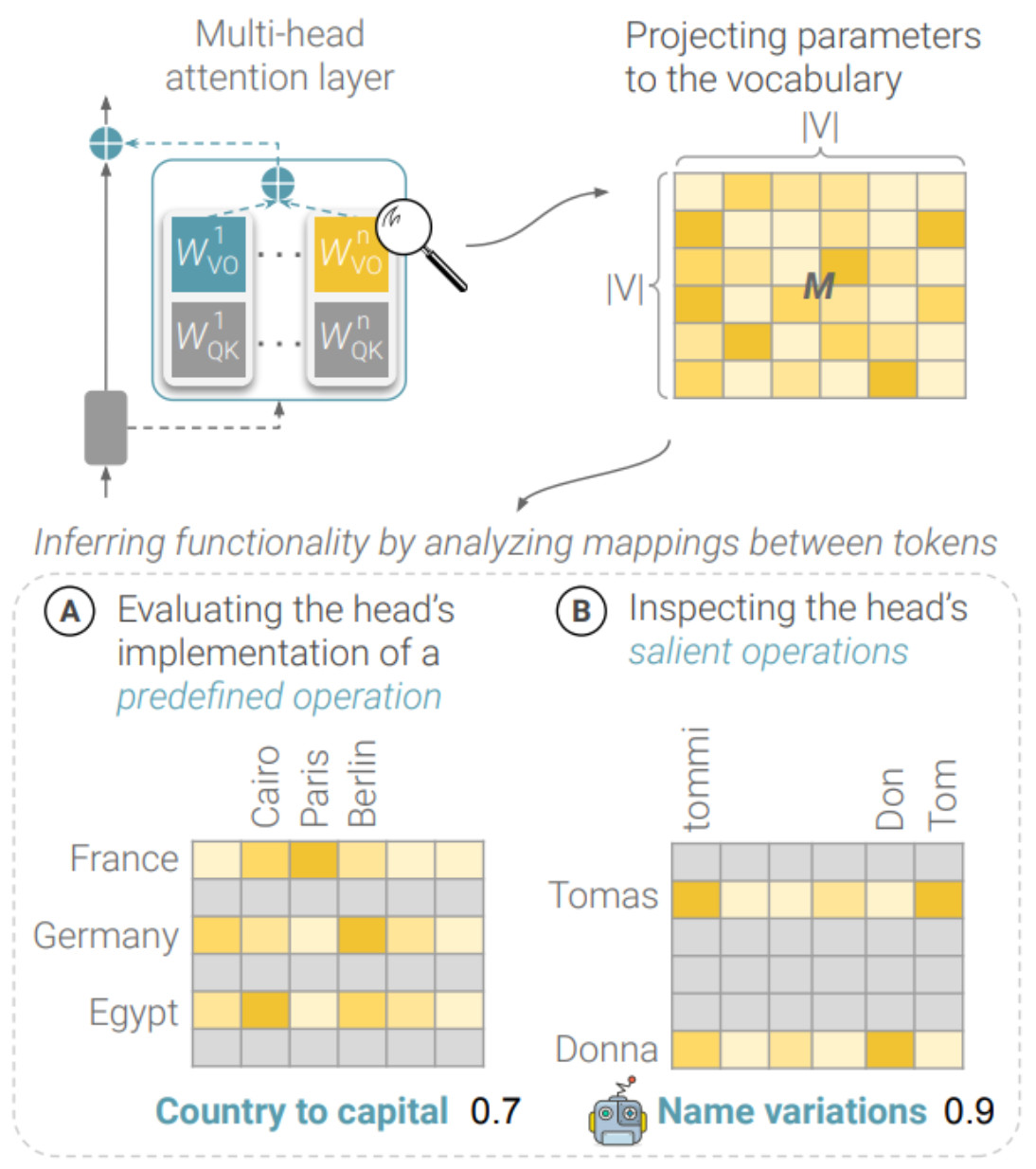

Here, we take a different approach, inspired by @anthropic.com and @guydar.bsky.social, and inspect the head in the vocabulary space 🔍 (2/10)
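For readers who want a concrete picture of "inspecting a head in the vocabulary space", here is a minimal sketch. It is not the MAPS code: the matrices are random toys, the names `head_vocab_map` and `top_targets` are hypothetical, and the sketch assumes that a head's value and output projections are sandwiched between the embedding and unembedding matrices, ignoring layer norm and biases.

```python
import numpy as np

rng = np.random.default_rng(0)
vocab, d_model, d_head = 50, 16, 4  # toy sizes, not from any real model

# Toy stand-ins for model parameters (assumption: real weights would be
# loaded from a checkpoint instead).
E = rng.normal(size=(vocab, d_model))     # token embedding matrix
U = rng.normal(size=(d_model, vocab))     # unembedding matrix
W_V = rng.normal(size=(d_model, d_head))  # one head's value projection
W_O = rng.normal(size=(d_head, d_model))  # one head's output projection


def head_vocab_map(E, W_V, W_O, U):
    """Return a (vocab x vocab) matrix: row s scores how strongly the head
    promotes each target token when it attends to source token s."""
    return E @ (W_V @ W_O) @ U


def top_targets(M, source_token, k=3):
    """Indices of the k target tokens with the highest score for a source."""
    return np.argsort(M[source_token])[::-1][:k]


M = head_vocab_map(E, W_V, W_O, U)
top = top_targets(M, source_token=7)
print(M.shape, top)
```

With real weights, the rows of `M` can then be checked against token pairs that express a relation of interest.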

(A) Predefined relations: groups expressing certain relations (e.g. city of a country)

(B) Salient operations: groups for which the head induces the most prominent effect (3/10)

Ablating heads that implement an operation damages the model’s ability to perform tasks requiring that operation more than ablating other heads does (4/10)

(2) Different heads implement the same relation to varying degrees, which has implications for localization and editing of LLMs (6/10)

(4) In Llama-3.1 models, which use grouped-query attention, grouped heads often implement the same or similar relations (7/10)
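As a reminder of what "grouped heads" means, here is a small sketch of how query heads map to shared key/value heads under grouped-query attention. The function names are hypothetical, and the contiguous layout (consecutive query heads share one KV head) is an assumption that matches common implementations. The 32 query heads and 8 KV heads are the Llama-3.1-8B configuration.

```python
def kv_group_of(query_head, n_query_heads, n_kv_heads):
    """Index of the key/value head shared by the given query head."""
    if n_query_heads % n_kv_heads != 0:
        raise ValueError("n_query_heads must be divisible by n_kv_heads")
    group_size = n_query_heads // n_kv_heads
    return query_head // group_size


def heads_in_group(group, n_query_heads, n_kv_heads):
    """All query heads that share the key/value head `group`."""
    group_size = n_query_heads // n_kv_heads
    start = group * group_size
    return list(range(start, start + group_size))


# Llama-3.1-8B: 32 query heads, 8 key/value heads, so groups of 4.
print(kv_group_of(5, 32, 8))     # query head 5 uses KV head 1
print(heads_in_group(1, 32, 8))  # [4, 5, 6, 7]
```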