🧪🗞️ New paper with Emmanuel Chemla and @rkatzir.bsky.social:

Neural nets offer good approximation but consistently fail to generalize perfectly, even when perfect solutions are proved to exist.

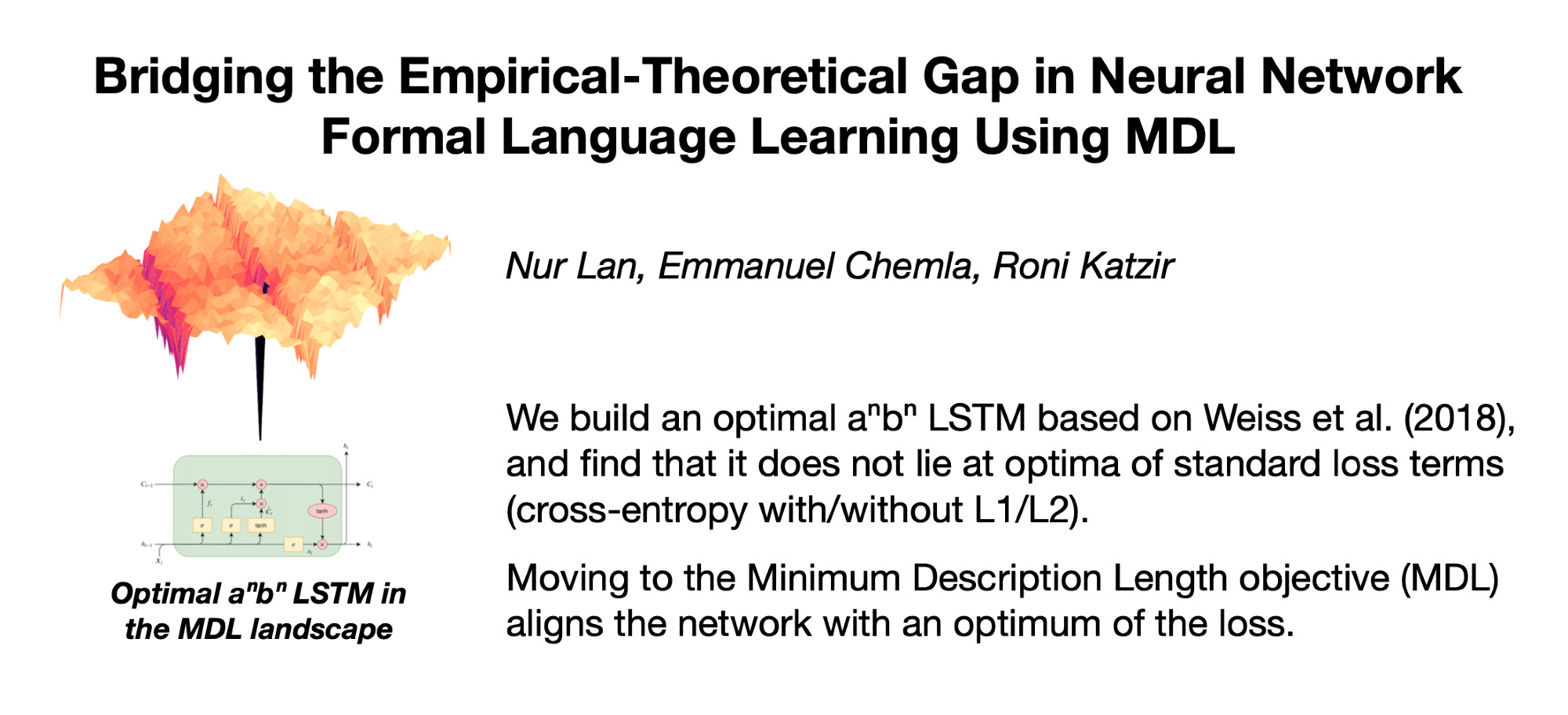

We check whether the culprit might be their training objective.

https://arxiv.org/abs/2402.10013

Comments

Meta-heuristics (early stopping, dropout) don't help either.

2/3

https://proceedings.mlr.press/v217/el-naggar23a.html

As well as with our MDL RNNs, which achieve perfect generalization on aⁿbⁿ, Dyck-1, etc.:

https://direct.mit.edu/tacl/article/doi/10.1162/tacl_a_00489/

3/3