Can deep learning finally compete with boosted trees on tabular data? 🌲

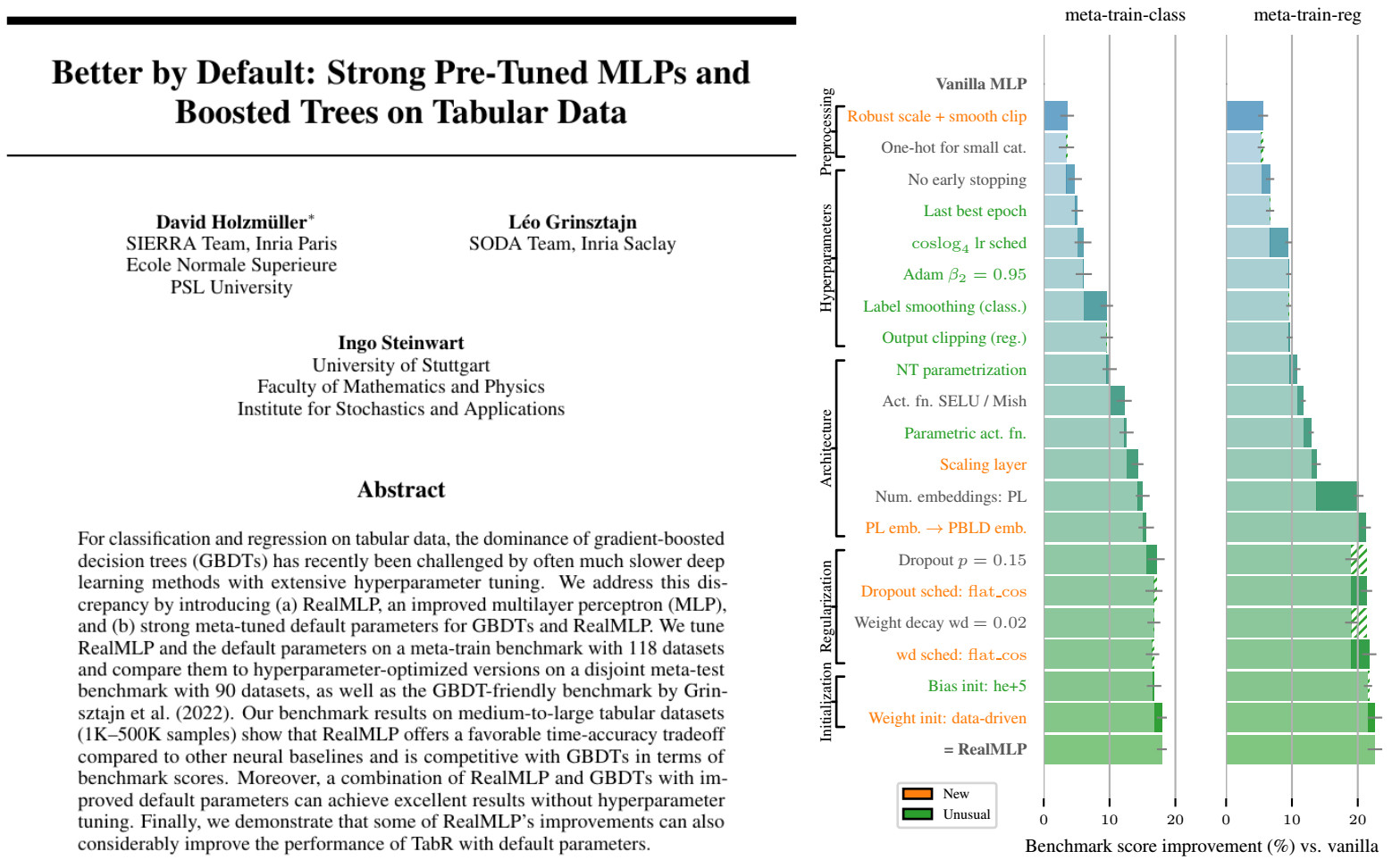

In our NeurIPS 2024 paper, we introduce RealMLP, a NN with improvements in all areas and meta-learned default parameters.

Some insights about RealMLP and other models on large benchmarks (>200 datasets): 🧵

Paper: https://arxiv.org/abs/2407.04491

Code: https://github.com/dholzmueller/pytabkit

Our library is pip-installable and contains easy-to-use and configurable scikit-learn interfaces (including baselines). 2/
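Since the interfaces follow scikit-learn conventions, any of them should drop into a generic fit/predict helper. A minimal sketch, assuming only the standard `fit`/`predict` methods: `holdout_accuracy` and `MajorityClassifier` are hypothetical names made up for this illustration, and the stand-in classifier is there only so the snippet runs without extra dependencies.

```python
from collections import Counter


def holdout_accuracy(model, X_train, y_train, X_test, y_test):
    """Fit any scikit-learn-style classifier and return its test accuracy."""
    model.fit(X_train, y_train)
    preds = model.predict(X_test)
    correct = sum(int(p == t) for p, t in zip(preds, y_test))
    return correct / len(y_test)


class MajorityClassifier:
    """Hypothetical stand-in: always predicts the most frequent training label."""

    def fit(self, X, y):
        self.label_ = Counter(y).most_common(1)[0][0]
        return self

    def predict(self, X):
        return [self.label_] * len(X)


# Toy data, purely illustrative.
X_train, y_train = [[0.0], [1.0], [2.0]], [1, 1, 0]
X_test, y_test = [[3.0], [4.0]], [1, 0]

acc = holdout_accuracy(MajorityClassifier(), X_train, y_train, X_test, y_test)
print(acc)

# With pytabkit installed, the same helper should accept one of its
# estimators instead of the stand-in, e.g. (class name taken from the
# project README; treat it as an assumption here):
#   from pytabkit import RealMLP_TD_Classifier
#   holdout_accuracy(RealMLP_TD_Classifier(), X_train, y_train, X_test, y_test)
```

The helper is deliberately duck-typed, so baselines and the new models can be compared through the same call.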

- a disjoint meta-test benchmark including large and high-dimensional datasets

- the smaller Grinsztajn et al. benchmark (with more baselines). 3/

We tuned defaults and our “bag of tricks” only on meta-train. Still, RealMLP outperforms the MLP-PLR baseline with numerical embeddings on all benchmarks. 4/

The resulting RealTabR-D performs much better than TabR with the default parameters from the original paper. 5/

Taking the best TD model (Best-TD) on each dataset typically gives a better time-accuracy trade-off than 50 steps of random-search HPO. 7/
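The Best-TD idea can be sketched in a few lines. This is a rough illustration, not the paper's evaluation code: it assumes the winner is picked per dataset by lowest validation error, and `select_best_td`, the dataset names, the model names, and all numbers are hypothetical placeholders rather than results.

```python
def select_best_td(val_errors):
    """Map each dataset to the model with the lowest validation error.

    val_errors: {dataset_name: {model_name: validation_error}}
    """
    return {
        dataset: min(errors, key=errors.get)
        for dataset, errors in val_errors.items()
    }


# Placeholder validation errors (made up for illustration only).
val_errors = {
    "dataset_a": {"model_x": 0.12, "model_y": 0.10, "model_z": 0.15},
    "dataset_b": {"model_x": 0.30, "model_y": 0.34, "model_z": 0.31},
}

best = select_best_td(val_errors)
print(best)
```

The cost of this scheme is one training run per candidate model, which is what makes it cheap next to a 50-step search.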