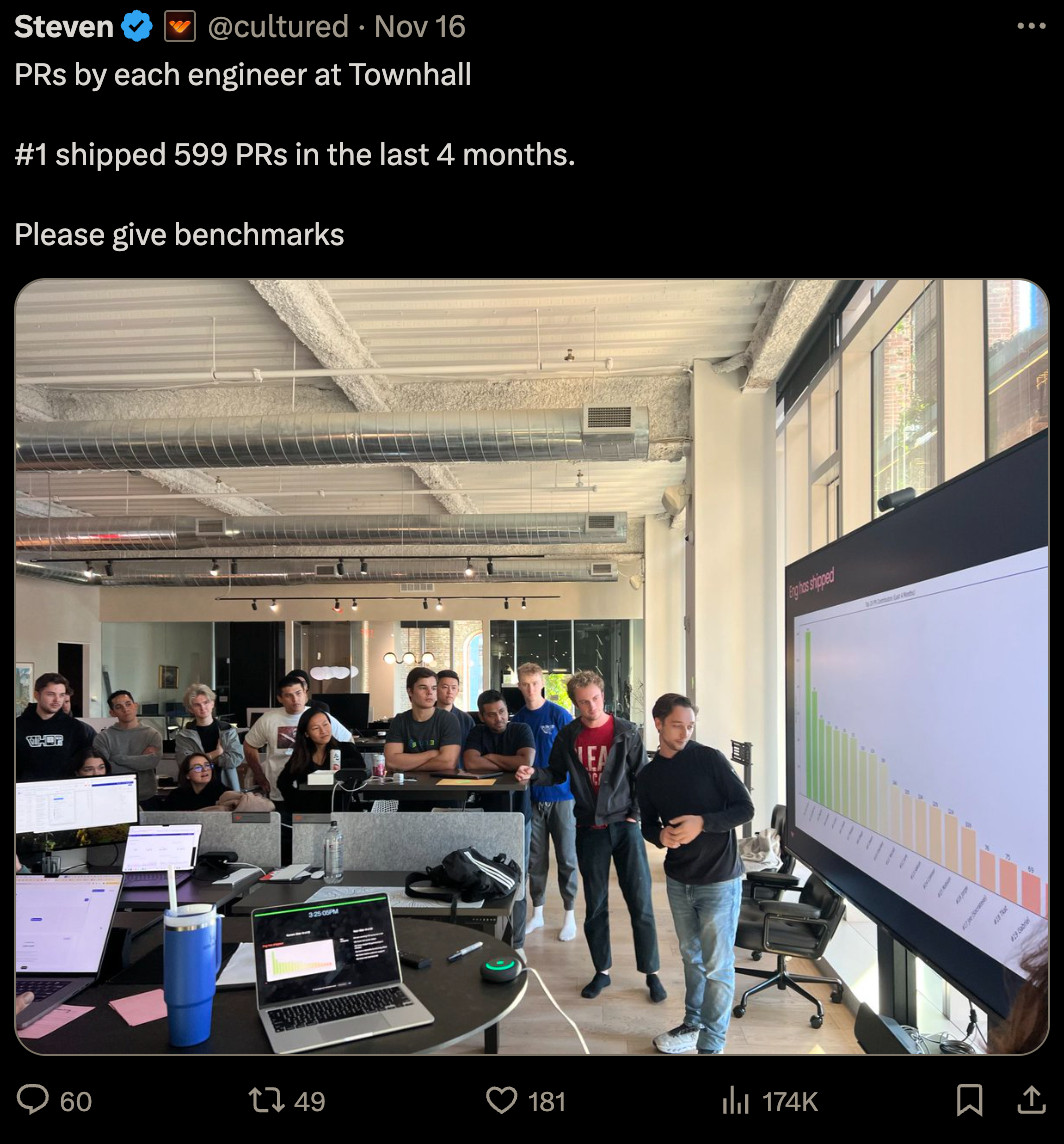

imagine your CEO measures engineers by the number of PRs they open...

🤦...

🤦🤦🤦🤦🤦...

INSTEAD you might be interested in measuring the quality of your process by:

👉 how OFTEN you SHIP

👉 how LONG it takes to SHIP

👉 how often you BREAK things

👉 how long it takes to RECOVER

👉 DORA METRICS 👈

visit dora (.) dev
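A minimal Python sketch of how the four measures above could be computed from a deployment log. The `Deployment` record, its field names, the use of medians, and the assumption that a failure starts at deploy time are all illustrative choices, not how dora.dev or any particular tool defines or collects the data.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class Deployment:
    # Hypothetical record shape; adapt it to whatever your CI/CD system exports.
    commit_time: datetime                    # when the change was committed
    deploy_time: datetime                    # when it reached production
    failed: bool = False                     # did this deploy break production?
    restored_time: Optional[datetime] = None  # when service was restored, if it broke


def hours(delta: timedelta) -> float:
    return delta / timedelta(hours=1)


def dora_metrics(deploys: list, period_days: int) -> dict:
    if not deploys:
        raise ValueError("need at least one deployment")
    # How OFTEN you SHIP: deployments per day over the period.
    deploys_per_day = len(deploys) / period_days
    # How LONG it takes to SHIP: median hours from commit to production.
    lead_time = median(hours(d.deploy_time - d.commit_time) for d in deploys)
    # How often you BREAK things: share of deployments that caused a failure.
    failures = [d for d in deploys if d.failed]
    change_failure_rate = len(failures) / len(deploys)
    # How long it takes to RECOVER: median hours from the failing deploy to restore.
    # Assumption: the failure starts at deploy time (real tooling uses incident start).
    restores = [hours(d.restored_time - d.deploy_time)
                for d in failures if d.restored_time is not None]
    time_to_restore = median(restores) if restores else 0.0
    return {
        "deploys_per_day": deploys_per_day,
        "lead_time_hours": lead_time,
        "change_failure_rate": change_failure_rate,
        "time_to_restore_hours": time_to_restore,
    }


# Usage with made-up data: four deploys in one week, one of which broke production.
base = datetime(2024, 1, 1)
deploys = [
    Deployment(base, base + timedelta(hours=4)),
    Deployment(base + timedelta(days=1), base + timedelta(days=1, hours=2),
               failed=True, restored_time=base + timedelta(days=1, hours=3)),
    Deployment(base + timedelta(days=2), base + timedelta(days=2, hours=6)),
    Deployment(base + timedelta(days=3), base + timedelta(days=3, hours=1)),
]
metrics = dora_metrics(deploys, period_days=7)
print(metrics)
```

Tracking these per team over time (rather than per engineer) keeps the focus on the delivery process instead of individual output.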

Comments

https://echolayer.com/learn/the-fifth-dora-metric-reliability

Looks like it's actually a set of smaller metrics 🙃

Another point: if a product "doesn't need to change often", it will probably get replaced by something better.

But it's not a fair comparison across teams.

For example, some teams have more legacy code or other constraints.

Where I find DORA especially useful is in comparing the metrics for the same team over time.

We can clearly see which decisions had an impact.

Of course, lines of code or number of PRs is not a measure I would use, but I don't believe in producing none of either, even as a lead or consultant.

Yeah, agreed. Context matters, though.

However, how do you like the green and red public fame-and-shame approach? 😁

It gives the entire team a good incentive to stay on top of the work and to ship smaller changes.

Not sure if it's represented in DORA metrics in any way, but it's a nice way to measure how responsive the team is to review requests.

Time to first commit/deploy shows how fast you onboard people, and it is harder to game. Even if the first change only adds a console.log, shipping it is still a useful onboarding step.
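A minimal sketch of the time-to-first-deploy idea, assuming you can pull each new hire's start date and the dates their changes reached production. The function name and the sample data are hypothetical.

```python
from datetime import date
from typing import Optional


def days_to_first_deploy(start_date: date, deploy_dates: list) -> Optional[int]:
    """Days from a new hire's start date to their first production deploy.

    Returns None if they have not shipped anything since starting.
    """
    shipped = [d for d in deploy_dates if d >= start_date]
    if not shipped:
        return None
    return (min(shipped) - start_date).days


# Hypothetical onboarding data: start date plus the dates each person's changes shipped.
new_hires = {
    "alice": (date(2024, 3, 4), [date(2024, 3, 6), date(2024, 3, 8)]),
    "bob": (date(2024, 3, 11), [date(2024, 3, 20)]),
    "carol": (date(2024, 3, 18), []),
}

for name, (start, deploys) in new_hires.items():
    result = days_to_first_deploy(start, deploys)
    print(name, "not shipped yet" if result is None else f"{result} days")
```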

We've been tracking that for a few months now. Right, every metric can be gamed, so what matters is whether you treat the metric as the end goal or as a proxy for some aspects of quality.

Regarding comments: have you seen any correlation between management scrutinizing this metric and people gaming it? Because that's my concern.