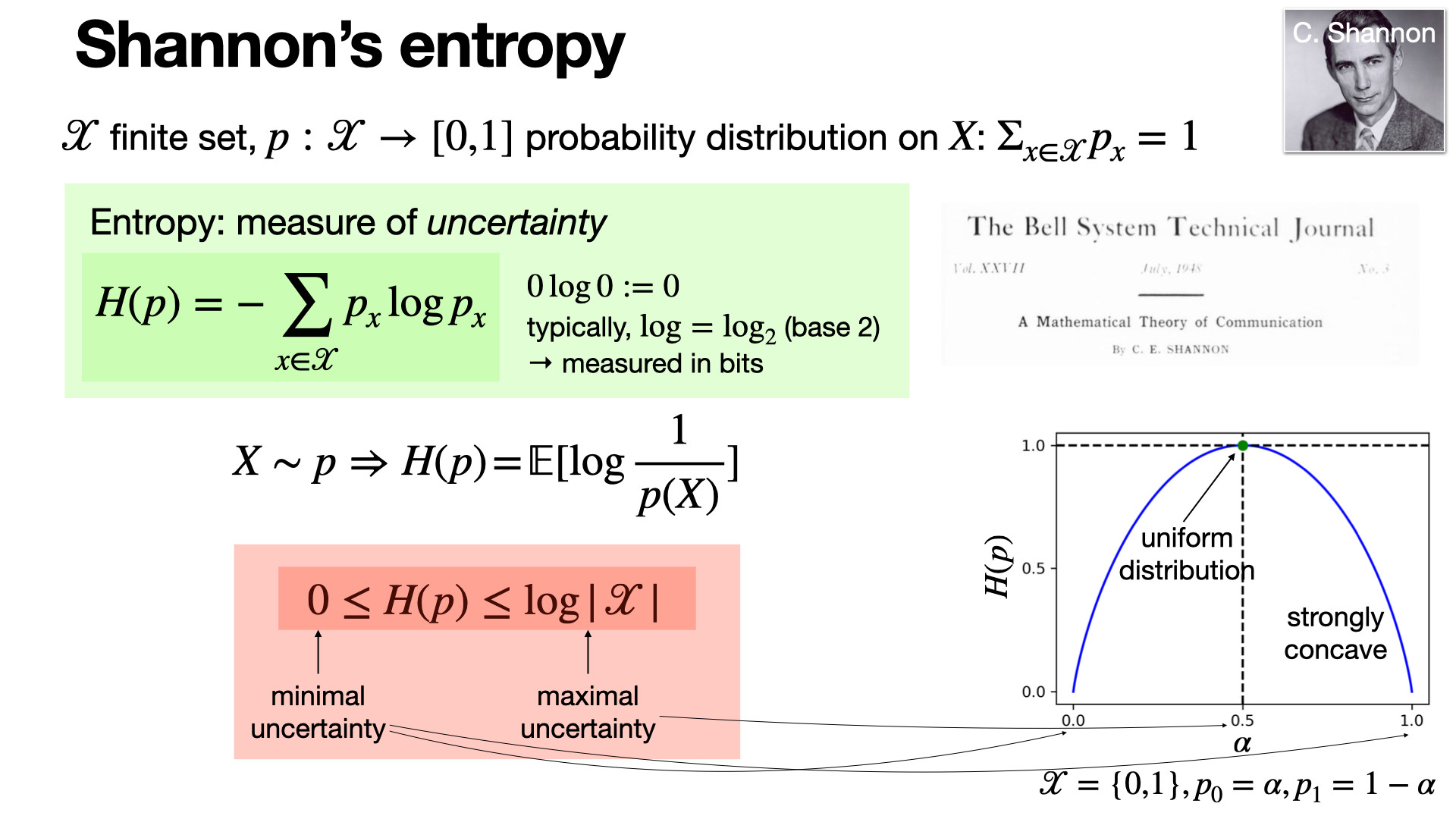

Shannon entropy measures the uncertainty, or average information content, of a probability distribution. It's a foundational concept in data compression and communication, introduced in Claude Shannon's 1948 paper “A Mathematical Theory of Communication”: https://people.math.harvard.edu/~ctm/home/text/others/shannon/entropy/entropy.pdf
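To make the idea concrete, here is a minimal sketch of the entropy of a discrete distribution, H = -Σ pᵢ log₂ pᵢ, measured in bits. The function name `shannon_entropy` and the example distributions are illustrative choices, not something taken from the paper.

```python
import math


def shannon_entropy(probs):
    """Entropy in bits of a discrete distribution given as a list of probabilities."""
    if any(p < 0 for p in probs) or not math.isclose(sum(probs), 1.0):
        raise ValueError("probs must be non-negative and sum to 1")
    # Outcomes with p == 0 are skipped: p * log2(p) tends to 0 as p -> 0.
    return sum(-p * math.log2(p) for p in probs if p > 0)


print(shannon_entropy([0.5, 0.5]))  # fair coin
print(shannon_entropy([0.9, 0.1]))  # biased coin: more predictable, lower entropy
print(shannon_entropy([1.0]))       # certain outcome: no uncertainty at all
```

A fair coin comes out at exactly one bit, a biased coin at less than one bit, and a certain outcome at zero, which matches the reading of entropy as "how uncertain you are before you see the result."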

Comments

To be able to put a number on entropy like this is awesome.

How many bits is your life 🥳