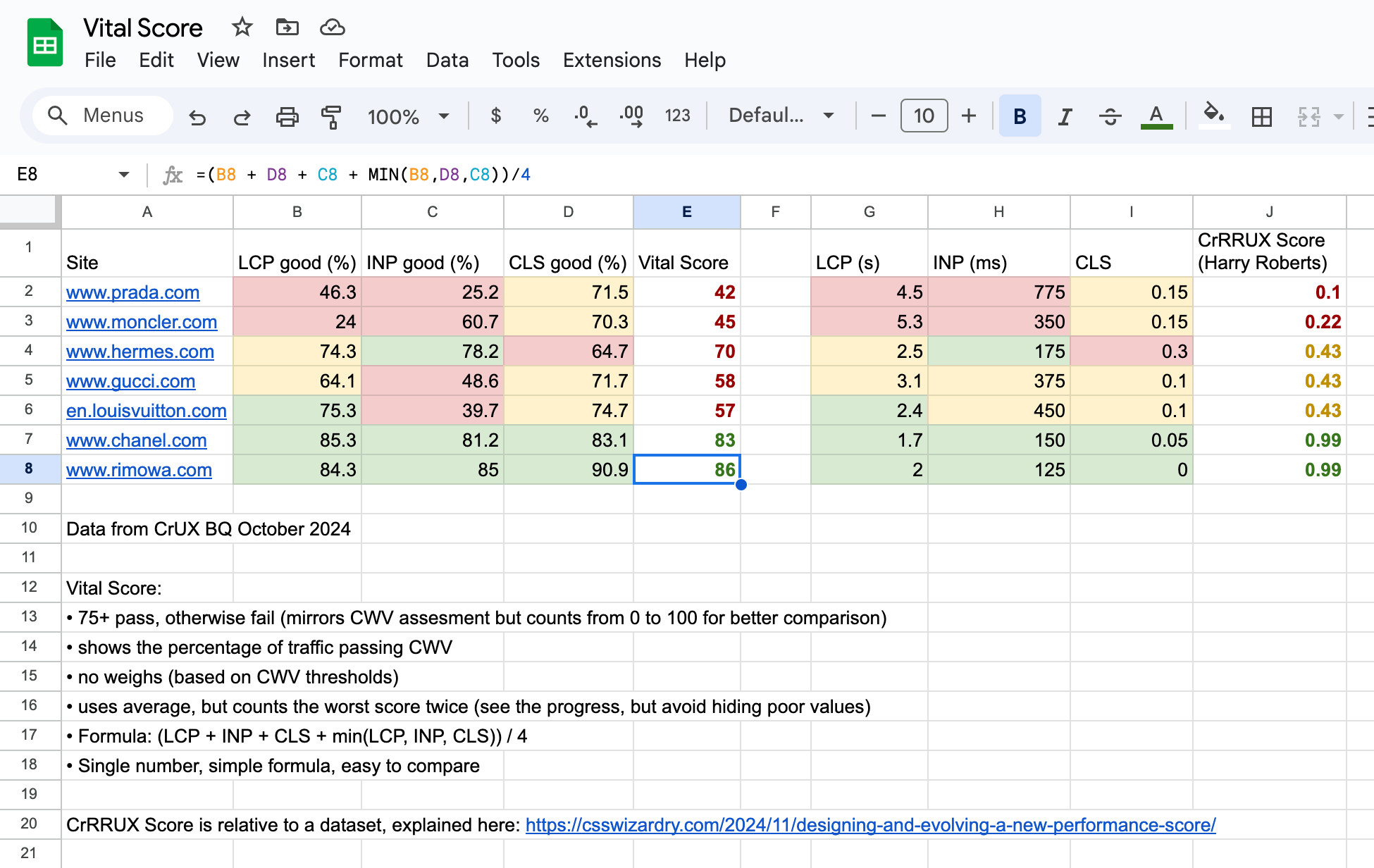

Here's my idea for Vital Score:

• shows % of traffic passing CWVs

• no weights, shows the progress, easy to compare, evolves CWV assessment

• formula: (LCP + CLS + INP + min(LCP, CLS, INP)) / 4

What do you think? #webperf

Thx @timvereecke.bsky.social for the min() trick and @csswizardry.com for CrRUX
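A minimal Python sketch of the formula above, assuming each input is the percentage of page views rated "good" for that metric (0 to 100). The function name `vital_score` is hypothetical and not taken from any published tool.

```python
def vital_score(lcp: float, cls: float, inp: float) -> float:
    """Average the three pass rates, counting the weakest one twice.

    Each argument is assumed to be the percentage (0-100) of page
    views with a "good" value for that metric.
    """
    return (lcp + cls + inp + min(lcp, cls, inp)) / 4


# Illustrative pass rates, not real measurements.
print(vital_score(lcp=69, cls=91, inp=66))  # 73.0
```

Because the weakest metric is counted twice, raising it moves the score more than raising either of the other two by the same amount.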

Comments

I use a calculation with the established 75th percentiles:

For each metric

- 100% if 75th is Good

- 0% if Poor

- linearly declining if in between

and then (lcp+cls+inp)/3.
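A rough sketch of that p75-based calculation in Python. The comment doesn't spell out the thresholds, so this assumes the published Core Web Vitals boundaries (LCP 2.5 s / 4 s, CLS 0.1 / 0.25, INP 200 ms / 500 ms); the names `THRESHOLDS`, `metric_score` and `p75_score` are hypothetical.

```python
# Assumed (good, poor) boundaries per metric: LCP and INP in ms, CLS unitless.
THRESHOLDS = {
    "lcp": (2500.0, 4000.0),
    "cls": (0.1, 0.25),
    "inp": (200.0, 500.0),
}


def metric_score(p75: float, good: float, poor: float) -> float:
    """100 if the p75 is good, 0 if poor, linear in between."""
    if p75 <= good:
        return 100.0
    if p75 >= poor:
        return 0.0
    return 100.0 * (poor - p75) / (poor - good)


def p75_score(p75_values: dict) -> float:
    """Plain average of the three per-metric scores: (lcp + cls + inp) / 3."""
    scores = [
        metric_score(p75_values[name], good, poor)
        for name, (good, poor) in THRESHOLDS.items()
    ]
    return sum(scores) / len(scores)


# Illustrative p75 values: LCP halfway between good and poor, CLS good, INP poor.
print(p75_score({"lcp": 3250, "cls": 0.05, "inp": 600}))  # 50.0
```

Note that this variant tops out at 100 as soon as all three p75 values are good, so it can't reward improvements beyond the passing threshold.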

Yours rewards pages where more than 75% experience good vitals, which is great.

75% is the magic number for passing CWV. After that, you just make the website faster, which is rewarded with a higher score.

It *does* potentially mask a site that does extremely well on one metric & poorly on the other two (Ex: CLS 91% LCP 69% INP 66%; VS == 73%)

But correcting for that would add complexity. 🤔

• "% Good CWV" – i.e. the % of all page views that support the CWV which have "good" LCP, CLS and INP. That can be used for different levels of aggregation (URL-level, page types, origin).

• Displaying "Passed" or "Failed" – i.e. if the p75 for the CWV are "good".

I am (slightly) surprised to see people reaching for yet another 'one true metric' given all the historical issues of having such a score.

There are reasons for the score:

• compare sites/pages

• LCP/CLS/INP names and meanings are complex to understand for non-tech people

• It's an evolution of CWV assessment (not a new metric)

What do you think are the downsides?

* CrUX & RUM won't have the same score

* CWV assessment is binary, not a range/score

* Many incorrectly thought LH score was a ranking factor and will likely do the same with a score (instead of assessment pass/fail)

Also, it quantifies UX improvements, which is the main goal.

I think you're taking a weighted average between two methods: an average, and a min, such that the average has a weight of 3/4 and the min has a weight of 1/4. So depending on preference, it could be tweaked, right?

Do you think 3/5 and 2/5 or 1/2 and 1/2 would make more sense?
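To make the weighting question concrete, here is a small Python sketch that treats the score as a blend of the plain average and the minimum. The function `blended_score` and its `min_weight` parameter are hypothetical; `min_weight=0.25` reproduces the original formula, since (sum + min) / 4 equals 3/4 of the average plus 1/4 of the min.

```python
def blended_score(lcp: float, cls: float, inp: float, min_weight: float = 0.25) -> float:
    """Blend of the plain average and the weakest metric.

    min_weight is the share given to min(); the average gets the rest.
    """
    average = (lcp + cls + inp) / 3
    worst = min(lcp, cls, inp)
    return (1 - min_weight) * average + min_weight * worst


# Illustrative pass rates; compare the 3/4-1/4, 3/5-2/5 and 1/2-1/2 splits.
for weight in (0.25, 0.4, 0.5):
    print(weight, round(blended_score(69, 91, 66, min_weight=weight), 1))
```

A larger `min_weight` pulls the score further toward the weakest metric, which makes one strong metric less able to hide two weak ones.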

If you just use (min) you could go from (95, 97, 72) to (76, 78, 73) and be happy you “improved”
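Running those two sets of numbers through both approaches shows the difference. This is only a sketch: the inputs are read as per-metric pass rates, and the helper names `min_only` and `vital_score` are hypothetical.

```python
def min_only(lcp: float, cls: float, inp: float) -> float:
    """Score based purely on the weakest metric."""
    return min(lcp, cls, inp)


def vital_score(lcp: float, cls: float, inp: float) -> float:
    """Average of the three metrics with the weakest counted twice."""
    return (lcp + cls + inp + min(lcp, cls, inp)) / 4


before = (95, 97, 72)
after = (76, 78, 73)

print(min_only(*before), min_only(*after))        # 72 73
print(vital_score(*before), vital_score(*after))  # 84.0 75.0
```

With min alone the change looks like a one-point gain; with the average in the mix the score drops from 84 to 75, which reflects the regression in the other two metrics.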

Lighthouse has this issue which is why the scoring curves haven’t been updated for a while

Challenge with all this is you’ve got to look at a lot of data to validate it