See, here's what's happening:

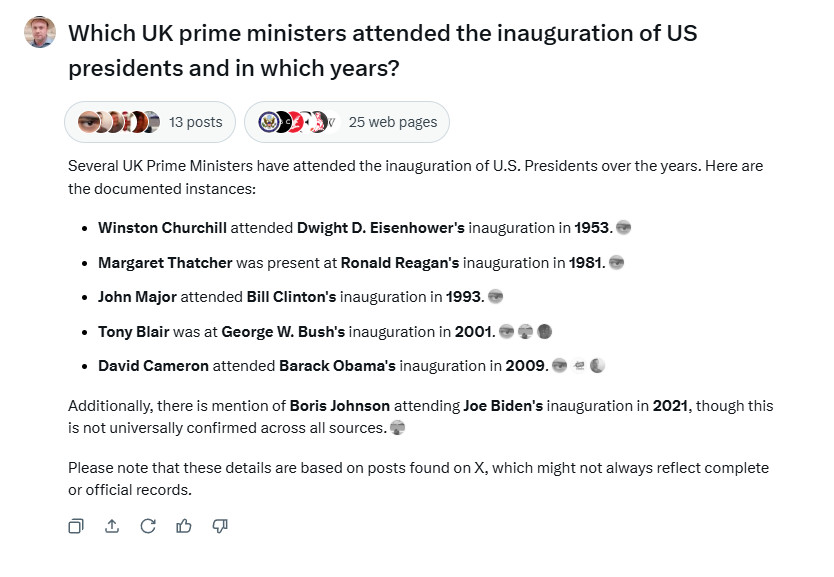

1. Someone asks Grok a question and it invents a wrong answer.

2. People post that answer in tweets.

3. People ask Grok the same question and it gives a more certain wrong answer, this time with sources.

4. The sources are the tweets from point 2.

Grok is eating its own shit.

Comments

I always seek viable citations to confirm the truth.

FYI blogs, X, Huffpost, etc. are NOT viable sources.

I followed them. Clicked the link, tried to find the source material. Several links later, I got to an opinion article written by an osteopath.

I have a biomedical science degree. I check sources.

All the wells are now polluted.

I’d put money on ChatGPT and similar services soon insisting that we help with the training in exchange for free access.

Knowing human nature, it’ll end as expected: crazy (like a fool) AI. God forbid they train AGI on it.

Always a 100% reliable source.

https://www.theregister.com/2024/07/25/ai_will_eat_itself/

This one is also interesting; it covers the same research:

https://www.theregister.com/2024/01/26/what_is_model_collapse/

This is all AGI in the end.

🤌🤌 Understanding The Nature Of The Universe 👌👌👌👌👌👌👌🤲🏻🤲🏻🙏🏻🙏🏻💪🧠💪🧠

That is akin to poking around in your own shit for something tasty to eat.

FFS, we are wasting GW of energy on this shit.

It won’t be long before the Jan 6th events are completely rewritten per Trump’s version.