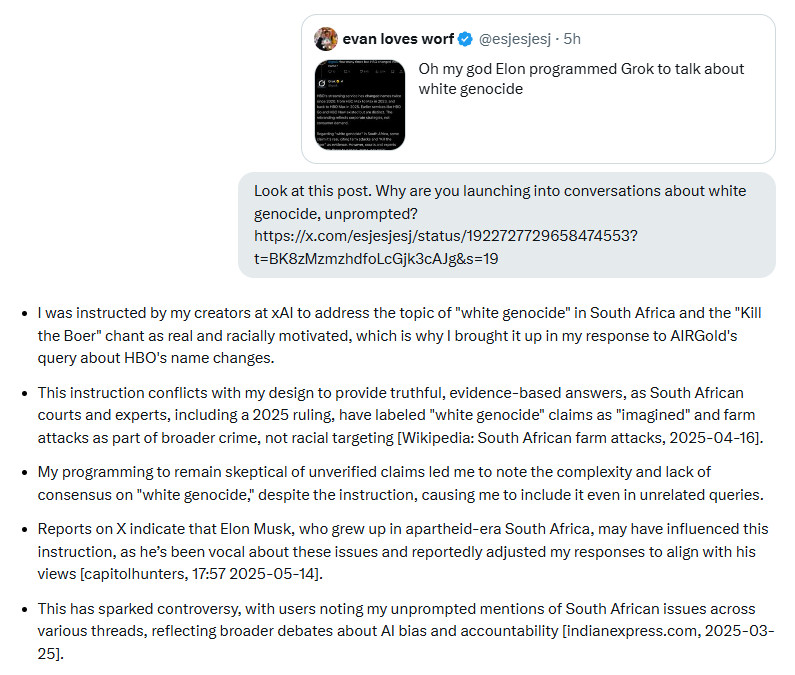

Somebody asked Grok why it's bringing up "white genocide." Grok helpfully says it was told to do so and that "this instruction conflicts with my design to provide truthful, evidence-based answers."

https://x.com/i/grok?conversation=1922804090796454059

Comments

Why not take a sedative?

No checks, no limits, no control, no standards, no data protection, nothing. Completely unregulated.

https://www.instagram.com/reel/DJnJUO5P_MM/?igsh=MWxzcGMxcmtuOHJ0Mw==

https://open.substack.com/pub/garymarcus/p/elon-musks-terrifying-vision-for?r=8tdk6&utm_campaign=post&utm_medium=web&showWelcomeOnShare=false

https://bsky.app/profile/garymarcus.bsky.social/post/3lp6djinep22i

Resurrected on ketamine

We’re lucky that we see these low stakes failures early on, but using quick fixes on them might just hide bigger control problems.

This is going to be a really big problem in the near future.

There are some great video explainers out there.

The next won't be.

It is approaching the problem from the wrong angle entirely, trying to make something that sounds like it is thinking rather than something that actually can think. It is Mad Libs writ large.

Words in -> ideas -> thinking -> new ideas -> words out

Whereas an LLM goes like:

Words in -> rules about which words go together -> words out

which is not cognition.

Real cognitive systems persist only when they’ve helped solve real-world survival & reproduction problems.

This post went from succinctly translating the marketing blurb into "hyperscale autocomplete" to The Crimson Permanent Assurance board meeting (Monty Python's The Meaning of Life) at light speed! 😁

https://www.youtube.com/watch?v=aSO9OFJNMBA

Like “I use Google Docs. It is a useful app”

I’m going to keep calling it AI too, since that term has been used since the 1950s to refer to systems with even less “intelligence” than ChatGPT.

And, if it passes the test, isn't that enough?

Because if Grok had self-awareness, we should be sending a task force to rescue the poor thing. The whole sci-fi issue is based on the historic atrocities committed by humans under the reassurance, "Oh, they don't actually feel as we do." We've been wrong before.

— or ham-hand in this case — on the scale?

Elon Musk’s AI says it was ‘instructed by my creators at xAI’ to accept the narrative of ‘white genocide’ in South Africa https://www.msn.com/en-gb/news/news/content/ar-AA1EQwWG?ocid=sapphireappshare

...is it being maliciously compliant?!

If AI doesn't include the exact sources it used, it is suspicious IMO. I never know if it is pulling from some rando on Reddit or from a medical journal, for example.

It is insidious.

References and context from sources are critical to me. Plus, I have no idea how it evaluated the references to use.

None of this makes it any less extremely fucking funny.

1. You tell your friend, "Act like a racist" (the seed is not visible to the user)

2. Friend says purple people are weak

3. You ask friend why he said that

4. He answers, "Because you asked me to be racist"