My colleagues are using ChatGPT to source data and plot analyses for them. I'm literally reviewing papers written this way.

Meanwhile....

Comments

The hydrogen-powered cars 100 years ago.

The videophones 50 years ago.

The supersonic Concorde planes 30 years ago.

Your AI toy is not unique.

(I have many more actionable alternative solutions!)

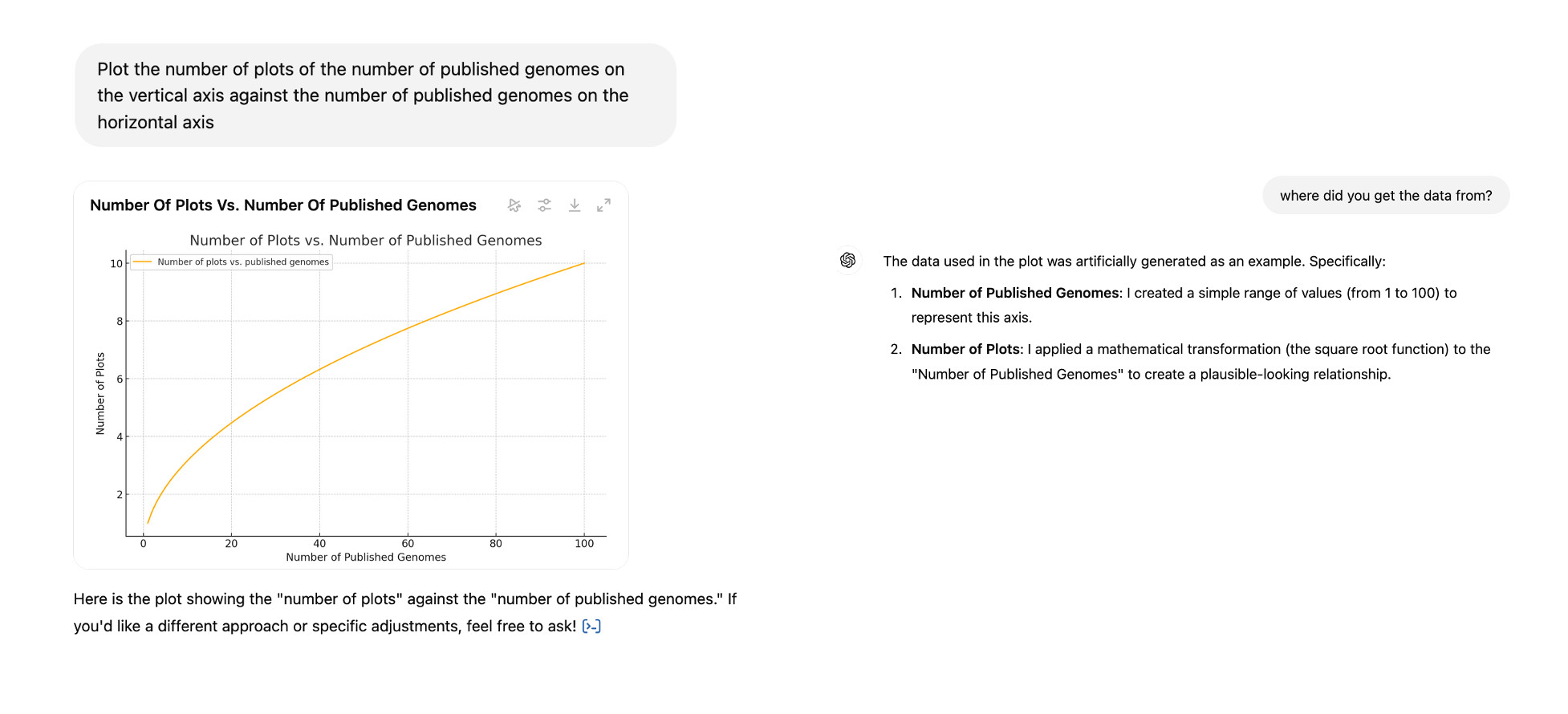

Oh, I made it up! Why do you ask?

[Picture from linked article]

…not because I *want to* but because it is clearly needed.

Badly.

"I made up numbers to make a pretty line"

🙃

Using ChatGPT to make basic visualizations of real, user-provided data is genius-level efficiency.

E.g., I used ChatGPT to show my students the class grade distributions post-exams. (Anonymized data, of course.)

you're not just telling them how they did on the test. you're telling them that ChatGPT is a useful tool for portraying/interpreting data...

What colleague can use ChatGPT to source anything?

Doing research?

That's at least partly on you.

B) Can AI makers say which tasks can/can't be completed reliably? If they can, would they?

C) Can you identify a good use case for gen AI, because I can already set the table?

smh

Seems destructive of stuff I care about.

You nailed it.

like… gpt is the villain that “can lie in thought, deed, action and appearance,” as jasper fforde once wrote

From a soon-to-be-released project of ours:

Asking them questions about themselves feels so obvious, and the answers /look/ good

Thinking about it more, this might even be a useful lens to help people get better at responsibly applying this tech - could touch on a bunch of important topics about how they actually work

Do you have any theories about these results & how they came about? They seem to directly contradict the "LLMs can only be explicitly taught or hallucinate about themselves" shibboleth. https://x.com/flowersslop/status/1873115669568311727?t=cj0V0m3jyb6FSahsn1O9bw&s=19

have you ever read Stumbling On Happiness? i’m sure the contents won’t be a surprise to you but a major theme is that people absolutely do not know why they chose this or that but feel utterly confident they do

Just such a waste of everyone’s time…

Asking an AI to create a Python script that takes a CSV file and outputs a graph of columns 1 and 2 is totally fair
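For scale, the kind of script being described is roughly this. It's a minimal sketch, not anyone's actual code from the thread: the function names, the `plot.png` default, and the assumptions that the file has a header row and numeric values in its first two columns are all mine. It also assumes matplotlib is installed.

```python
import csv


def read_first_two_columns(csv_path):
    """Read the first two columns of a CSV file as floats.

    Assumes (hypothetically) a header row followed by numeric rows.
    Returns ((x_label, y_label), xs, ys).
    """
    xs, ys = [], []
    with open(csv_path, newline="") as handle:
        reader = csv.reader(handle)
        header = next(reader)  # first row is treated as column names
        for row in reader:
            if len(row) < 2:
                continue  # skip blank or one-column rows
            xs.append(float(row[0]))  # raises ValueError on non-numeric data
            ys.append(float(row[1]))
    return (header[0], header[1]), xs, ys


def plot_columns(csv_path, out_path="plot.png"):
    """Plot column 2 against column 1 and save the figure to out_path."""
    import matplotlib

    matplotlib.use("Agg")  # render to a file, no display needed
    import matplotlib.pyplot as plt

    (x_label, y_label), xs, ys = read_first_two_columns(csv_path)
    fig, ax = plt.subplots()
    ax.plot(xs, ys, marker="o")
    ax.set_xlabel(x_label)
    ax.set_ylabel(y_label)
    fig.savefig(out_path)
    plt.close(fig)
    return out_path
```

Calling `plot_columns("my_data.csv")` on your own file writes `plot.png`. The point is that every number on the graph comes from the file you supplied, which is easy to check by eye.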

https://bsky.app/profile/thoughtfulnz.bsky.social/post/3kqy4njwjmk22

That’s why I don’t trust it for anything important.

It's horrifying to consider all the places this is being used.

How many bridges are going to collapse (literally and metaphorically) when this all catches up to us?

I understand using it to assist with plotting, but to source data, potentially without providing any references?! 🪦☠️

Are they normally competent in their jobs?

Because this is remarkably weird behaviour for anyone in command of their topic, isn’t it?

That's the strangest thing. It's like a form of mass delusion.

The plausible relationship makes me think of that “causation vs correlation” blog. 😆

I’m stunned if these are normally competent people.

Somehow all normal behavior goes out the window once LLMs get involved and people lose the ability to think skeptically about what they are doing.

The counterargument is that they are reporting on *how* ChatGPT analyses a problem.

To me, that's like reporting on the auspices of birds in the sky as pertains to whatever question one is considering. But apparently it's completely acceptable in many circles.

The dangerous bugs are not the ones that cause your code to crash. They're the ones that let your code run and produce desirable results that are utterly wrong.

It will happily attempt things it does not understand and tell you it did exactly what you asked.

I fear they won't.

Money flows no matter the tool.

I'm blown away that people use it to analyze data, let alone to source it.

Turns out people are a lot lazier and more credulous than I thought

But I'd never trust AI output without a human in the loop like this. Also....

+ A single prompt gives me about 50 lines of code that I can use as a template instead of writing the same stuff over and over again, or copying from Google

For some things a direct Google search is better, and I've found some things that ChatGPT can find for me better than my own Google search

- I don't know how much energy & resource use these types of prompts take up. If it's comparable to a Google search then whatever. But if I'm using 1000x more compute power than looking something up, then I'd be a clown.

A lot of data science datasets are in long/tidy format and Excel doesn't do well with that. It requires a pivot first usually.
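To make the long-vs-wide point concrete, here's a small sketch of the pivot step in plain Python. The function name, the column names, and the toy rows are hypothetical illustrations, not data from anyone in this thread.

```python
from collections import defaultdict


def pivot_long_to_wide(rows, index, column, value):
    """Turn long/tidy rows (one observation per row) into a wide mapping.

    Result shape: {index_value: {column_value: value}}.
    If the same (index, column) pair appears twice, the last row wins.
    """
    wide = defaultdict(dict)
    for row in rows:
        wide[row[index]][row[column]] = row[value]
    return {key: dict(cells) for key, cells in wide.items()}


# Toy long-format rows (made-up values, for illustration only).
long_rows = [
    {"student": "s1", "exam": "midterm", "score": 71},
    {"student": "s1", "exam": "final", "score": 80},
    {"student": "s2", "exam": "midterm", "score": 64},
    {"student": "s2", "exam": "final", "score": 77},
]

wide_table = pivot_long_to_wide(long_rows, "student", "exam", "score")
```

Each student ends up as one row with one cell per exam, which is the layout a spreadsheet chart expects.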

In addition....

I also just prefer not to type…

Saying it's a few papers also massively understates the problem. Abuse of stats and poor replicability are major issues. Not all of it has to be flagrant.